Archive for the ‘BPM’ Category

March Events

March Events

Here are the SOA, BPM, and EA events coming up in March. If you want your events to be included, please send me the information at soaevents at biske dot com. I also try to include events that I receive in my normal email accounts as a result of all of the marketing lists I’m already on. For the most up to date list as well as the details and registration links, please consult my events page. This is just the beginning of the month summary that I post to keep it fresh in people’s minds.

- 3/3: ZapThink Practical SOA: Pharmaceutical and Health Care

- 3/4: Webinar: Implementing Information as a Service

- 3/6: Global 360/Corticon Seminar: Best Practices for Optimizing Business Processes

- 3/10 – 3/13: OMG / SOA Consortium Technical Meeting, Washington DC

- 3/10: Webinar: Telelogic Best Practices in EA and Business Process Analysis

- 3/11: BPM Round Table, Washington DC

- 3/12 – 3/14: ZapThink LZA Bootcamp, Sydney, Australia

- 3/13: Webinar: Information Integrity in SOA

- 3/16 – 3/20: DAMA International Symposium, San Diego, CA

- 3/18: ZapThink Practical SOA, Australia

- 3/18: Webinar: BDM with BPM and SOA

- 3/19: Webinar: Pega, 5 Principles for Success with Model-Driven Development

- 3/19: Webinar: AIIM Webinar: Records Retention

- 3/19: Webinar: What is Business Architecture and Why Has It Become So Important?

- 3/19: Webinar: Live Roundtable: SOA and Web 2.0

- 3/20: Webinar: Best Practices for Building BPM and SOA Centers of Excellence

- 3/24: Webinar: Telelogic Best Practices in EA and Business Process Analysis

- 3/25: ZapThink Practical SOA: Governance, Quality, and Management, New York, NY

- 3/26: Webinar: AIIM Webinar: Proactive eDiscovery

- 3/31 – 4/2: BPM Iberia – Lisbon

So much workflow, so little time

So much workflow, so little time

If your organization has begun to leverage workflow technologies as built into the typical BPM suite, you’ve probably run into one of the key challenges: when to use the BPM’s workflow engine versus the workflow capabilities of any of the other products you have in your enterprise. There’s no shortage of products that have built in workflow capabilities. Think about it. Most document management products have workflow capabilities (albeit typically associated with document approval). Your portal may have workflow capabilities. Your SDLC tools may include workflow for bug tracking. Your operations group may leverage a ticketing/work management system with workflow capabilities. So what’s the enterprise to do?

One of things that I’ve advised in the past is to consider whether or not the workflow involved requires any customization or not. Take the SDLC tooling as an example. While there’s typically some ability to change some of the data that flows through the process, the workflow itself probably doesn’t vary much from organization to organization. As a different example, imagine the workflow that supports the procurement and installation of new servers. While most organizations probably support this through a service request system, the odds are that the sequencing of tasks will vary widely from organization to organization. So, the need for customization is one factor. Unfortunately, it’s not the only one. You also have to consider the data element. Any workflow is going to have some amount of contextual information that’s carried throughout. So, while there may be a big need for process customization, that may be offset by the contextual information and schemas that may be provided as part of a tailored third party product.

All in all, the decision on when to use a general purpose workflow engine and when to use a tailored product is no different than the decisions an organization makes on when to build solutions in house versus when to buy packaged solutions. Look at the degree of customization you need in the workflow versus the pre-defined processes. Look at the work that will involved in setting up your own custom data stores and schemas versus the pre-defined databases. Ultimately, I think all large organizations will have a mixture of both. Unfortunately, that means some short term pain. All workflow systems typically have task management with them. Multiple tools means multiple task managers. Multiple task managers means multiple places to do your work. This isn’t exactly efficient, but, until we have standard ways to publish tasks to a universal task list and other standards associated with the use of workflow engines, we should strive to make good decisions on a process-by-process basis.

February Events

February Events

Here are the SOA, BPM, and EA events coming up in February. If you want your events to be included, please send me the information at soaevents at biske dot com. I also try to include events that I receive in my normal email accounts as a result of all of the marketing lists I’m already on. For the most up to date list as well as the details and registration links, please consult my events page. This is just the beginning of the month summary that I post to keep it fresh in people’s minds.

- 2/4 – 2/6: Gartner BPM Summit

- 2/5: ZapThink Practical SOA: Energy and Utilities

- 2/7 – 2/8: Forrester’s Enterprise Architecture Forum 2008

- 2/11: Web Services on Wall Street

- 2/13 – 2/15: ARIS ProcessWorld

- 2/13: ZapThink Webinar: Leverage Document Centric SOA for Competitive Advantage

- 2/19: Webinar: Integrated SOA Governance

- 2/25 – 2/28: BPTG’s Business Process Transformation – BPM Practitioner Course

- 2/25 – 2/27: Global Excellence Awards in BPM & BPM Technology Showcase

- 2/26 – 2/29: ZapThink LZA Bootcamp

Gaining Visibility

Gaining Visibility

While I always take vendor postings with a grain of salt, David Bressler of Progress Software had a very good post entitled, “We’re Running Out of Words II.” Cut through the vendor-specific stuff, and the message is that routing all of your requests through a centralized process management hub in order to gain visibility may not be a good thing. In his example, he tells the story of a company that took some existing processes and, in order to gain visibility into the execution of the process steps, externalized the process orchestration into a BPM tool. In doing so, the performance of the overall process took a big hit.

To me, scenarios like this are indicative of one major problem: we don’t think about management capabilities. Solution development is overwhelmingly focused on getting functional capabilities out the door. Obviously, it should be, but more often than not there is no instrumentation. How can we possibly claim that a solution delivers business value over time if there is no instrumentation to provide metrics? Independent of whether external process management is involved, gateway-based interception is used versus agent-based approaches, etc. we need to begin with the generation of metrics. If you’re not generating metrics, you’re going to have poor, or even worse, no visibility.

Unfortunately, all too often, we’re only focused on problem management and resolution, and the absence of metrics only comes to light if something goes wrong and we need to diagnose the situation. To this, I come back to my earlier statement. How can we have any confidence in saying that things are working properly and providing value without metrics?

Interestingly, once you have metrics, the relationships (and potentially collisions) between the worlds of enterprise system management, business process management, web service management, business activity monitoring, and business intelligence start to come together, as I’ve discussed in the past. I’ve seen one company take metrics from a BPM tool and placing them into their data warehouse for analysis and reporting from their business intelligence system. Ultimately, I’d love to see a differentiation based upon what you do with the data, rather than on the mere ability to collect the data.

More on Events

More on Events

Ok, the events page is finally functional. I gave up on WordPress plugins, and am now leveraging an embedded (iframe) Google Calendar. A big thank you to Sandy Kemsley, as she already had a BPM calendar which I’m now leveraging and adding in SOA and EA events. I hope this helps people to find out the latest on SOA, EA, and BPM events.

SOA, EA, and BPM Conferences and Events

SOA, EA, and BPM Conferences and Events

I’ve decided to conduct an experiment. In the past, I’ve been contacted about posting information regarding events on SOA, either via a comment to an existing post, or through an email request. I’ve passed on these, but at the same time, I do like the fact that I’ve built up a reader base and my goal has always been to increase the knowledge of others on SOA and related topics.

What I’ve decided to try is having one post per month dedicated to SOA events that will be occuring in the near future, in addition to this permanent page on the blog that people can review when they please. I’m not about to go culling the web for all events, but I will collect all events that I receive in email at soaevents at biske dot com, and post a summary of them including dates, topic, discount code, and link to the detail/registration page. Readers would be free to post additional events in comments, however, I don’t think very many people subscribe to the comment feed, so the exposure would be less than having it in my actual post. I’m going to try to leverage a Google Calendar (iCal, HTML) for this, which will also be publicly available, even if someone wants to include it their own blog.

While this is essentially free marketing, in reality, I’d make a few more cents by having more visitors to this site than I would if all of these events organizers threw their ads into the big Google Ad Pool with the hopes of it actually showing up on my site. If I get a decent number of events from multiple sources the first two months, I’ll probably keep it up. If I only get events from one source, I’ll probably stop, as it will begin to look like I’m doing marketing just for them and I don’t want anyone to have the perception that my content is influenced by others.

The doors are open. Send your SOA, EA, and BPM events to me at soaevents at biske dot com. Include:

- Date/Time

- Subject/Title

- Description (keep it to one or two sentences)

- URL for more detail/registration

- Discount code, if applicable

If you want to simply send an iCal event, that would probably work as well, and it would make it very easy for me to move it into Google Calendar. My first post will be on Feb. 1, the next on March 1, and so on. I will post events for the current month and the next month, and may include April depending on how many events I receive.

Gartner AADI: Context-Oriented Architecture

Gartner AADI: Context-Oriented Architecture

While I didn’t attend the later session on it, in the opening keynote of the AADI Summit on Monday, the term “Context-Oriented Architecture” was mentioned. A colleague (thanks Craig!) caught me in the hall and asked me what my thoughts were on it, and as usual, my brain starting noodling away and the end result was me leaving the conversation saying, “you’ll see a blog entry on this very soon!”

I’ve brought up the slides from the Gartner session on it, and they estimate that sometime in the 2010’s, we will enter the “Era of Context” where important factors are presence, mobility, web 2.0 concepts, and social computing. The slides contain a new long acronym, WYNIWYG (Winnie Wig?), which stands for “What You Need is What You Get.” While it seems that the Gartner slides emphasize the importance that mobility will have in this paradigm, I’d like to bring it back into the enterprise context. While mobility is very important, there is still a huge need for WYNIWYG concepts in the desktop context. There will be no shortage of workers who still commute to the office building each day with the computer in their cube or their desktop being their primary point of interaction with the technology systems.

I think the WYNIWYG acronym captures the goal: what you need is what you get. The notion of context, however, implies that what you need changes frequently within a given day. Keith Harrison-Broninski, in his book Human Interactions, discusses how a lot of what we do is driven by the role we are playing at that point in time. If we take on multiple roles during the course of our day, shouldn’t we have context-sensitive interfaces that reflect this? If you’re asking the question, “Isn’t this the same thing as the personalization wave that went in (and out?) with web portals?” I want to make a distinction. Context-sensitivity has to be about productivity gains, not necessarily about user satisfaction gains. Allowing a user to put a “skin” on something or other look and feel tweaks may increase their overall level of satisfaction, but it may not make them any more productivity (I’m not implying that look and feel was the only thing that the personalization wave was about, but there was certainly a lot of it). As a better example of context-sensitivity, I point to the notion of Virtual Desktops. This has been around since the days of X Windows, with the most recent incarnation of it being Apple’s Spaces technology within Leopard. With this approach, I can put certain windows on “Virtual desktops” rather than have all of them clutter up a single desktop. With a keystroke, I can switch between them. So, a typical developer may have one “desktop” that has Eclipse open and maximized, and another “desktop” that has Outlook or your favorite mail client of choice, etc. Putting them all in one creates clutter, and the potential for interruptions and productivity losses when I need to shift (i.e. context-shift) from coding to responding to email.

Taking this beyond the developer, I bring in the advent of BPM and Workflow technologies. I’ve blogged previously on how I think this will create a need for very lightweight, specific-purpose user interfaces. Going a step further, these entry points should all be context-sensitivie. I’m doing this particular task, because I’m currently playing this role. Therefore, somehow, I need to have an association between a task and a role, and the task manager on my desktop needs to be able to interact with the user interaction container (not any one specific user interface, but rather a collection of interfaces) in a context-sensitive manner to present what I need. In our discussion, he brought up an example of an employee directory. An employee directory itself probably doesn’t need to be context aware. What does need to be context-aware is the presence or lack thereof of the employee directory depending on the role I’m currently playing. Therefore, it’s the UI container that must be context-sensitive.

All in all, this was a very interesting discussion. In looking at the Gartner slides, I definitely agree that this is a 2010+ sort of thing, but if you’re in a position to jump out (way) ahead of the curve, there’s probably some good productivity gains waiting to be had. I recommend getting pretty comfortable with your utilization of BPM technology first, and then moving on to this “era of context.”

Test Driven Model Development

Test Driven Model Development

I had a great conversation with a colleague (thanks Andy) recently regarding BPM technology and the software development lifecycle. We were lamenting the fact that whenever a new development paradigm comes along, we run the risk of taking one (or more likely many) steps backward in our SDLC technologies.

Take, for example, test driven development (TDD). (Aside: I enjoyed the latest ARCast on TDD from Ron Jacobs.) How do you unit test when doing model driven development as is the case in most BPM tools? What are the appropriate “units” to test? For that matter, how does a continuous integration model work? Can the models be stored in a source code management system like Java or C# code? Can I run automated builds? Does the concept of a “build” even apply anymore? I have a hard time believing that the use of these new model-driven tools somehow negates the need for testing and continuous integration. It would be great to hear more on how the best practices we’ve learned in writing large systems in Java and C# now apply when programming through pictures from some of the vendors in the space. I don’t know whether any of the vendors have given any thought to this, but I have seen organizations that have run into some of these questions as they adopted BPM technologies. Anyone have some answers?

Update: InfoQ posted some similar thoughts under the heading, “Why do Java developers hate BPM?”

Driving SOA

Driving SOA

Jason Bloomberg of ZapThink, in their latest ZapFlash, put a new spin on their old concept of the SOA champion and put forth a job description for VP of SOA. While he certainly suggested a good base salary for the position, I question whether a permanent, executive position around SOA makes sense?

If you look at the job responsibilities listed, the question I ask is not whether these tasks are needed, but rather, whether a dedicated person is needed for all of them. Let’s look at a few of them:

Provide executive-level management leadership to all architecture efforts across the enterprise. The directors of Business Architecture, Enterprise Architecture, Technical Architecture, Data Architecture, and Network Architecture will all be your direct reports.

Don’t we already have this? It sounds like a Chief Architect to me.

Drive all Business Process Management (BPM) initiatives enterprisewide. Coordinate with process specialists across all lines of business, and drive architectural approaches to business process.

Again, to me, this sounds like the responsibility of the COO.

Establish and drive a SOA governance board that incorporates the existing IT governance board.

This is the only one that I simply disagree with. If we’re speaking in terms of IT governance as defined in Peter Weill’s book, I think the IT Governance Board should be factoring SOA strategy and governance into their decisions. That is, IT Governance subsumes SOA governance, not vice versa. Of course, there are also aspects of SOA governance that are implemented at a much lower level within projects to ensure consistency of service definitions, etc. Once again, however, this should be handled by the existing technology governance processes. We’re merely adding some additional criteria for them to be applying to projects.

Establish and lead the SOA Center of Excellence across the enterprise to pull together and establish core architectural best practices for the entire organization. Develop and enforce a mandate for conformance to such best practices.

This gets into the technical governance I just mentioned. I certainly agree that a SOA COE can be a good thing, but you certainly don’t need a new position just to manage in it. Why wouldn’t the Chief Architect or a delegate lead the COE as part of their day to day responsibilities?

Manage a budget that is not tied to individual line-of-business projects, but is rather earmarked for cross-departmental SOA/BPM initiatives that drive business value for the enterprise as a whole.

The question here is whether or not the current organizational structure prevents cross-departmental initiatives from being funded and managed properly. It implies that the individual LOBs will be more concerned about their own needs, and not that of the broader enterprise. If the corporate governance principles and goals have stated that the use of more shared, cross-cutting tehcnologies are needed, then it’s really a governance problem. While an organizational change can assist, you still need to ensure that LOB managers are making decisions in line with the corporate goals, rather than that of their individual LOBs.

Work closely with the VP of Project Management to insure close cooperation between architecture and project management teams, and to improve project management policies.

Once again, why can’t this be done by the existing architectural leadership?

There were additional items, but my thoughts kept coming back to “shouldn’t someone already be doing this?” If the answer to this is “no,” then you must ask yourself whether or not you’re really committed to SOA adoption. If you feel that you are, but don’t have anyone assigned to these responsibilities, does the creation of a new position make sense? For example, if the organization struggles with getting LOB managers to produce cross-departmental solutions, will throwing one more person into the mix fix the problem, or just add another point of view?

As with many of the ZapThink ZapFlashes, there’s always a bit of controvery but lots of goodness. In this case, the articulation of the responsibilites associated with managing SOA adoption are excellent. Do you need a new position to do it? As with any organizational decision, it depends. If you are committed to SOA, but can’t make it happen simply because all of the possible candidates are simply to busy to take on these new responsibilities, then it might make sense (although you’ll probably need to add staff elsewhere, too, if people really are that busy). If you’re trying to use the position to resolve competiting priorities where there isn’t universal agreement on what the right thing to do for the enterprise is, a new position may not resolve it.

Book Review: SOA and WS-BPEL

Book Review: SOA and WS-BPEL

I was recently sent a copy of “SOA and WS-BPEL” by Yuri Vasiliev and Packt Publishing for review. The book is subtitled, “Composing Service-Oriented Solutions with PHP and ActiveBPEL.” After reading the book, the subtitle is much more accurate than the primary title. The book is first and foremost a guide for constructing Web Services using the SOAP extension for PHP and building BPEL processes using ActiveBPEL. Secondarily, there is a discussion behind the principles of SOA and WS-BPEL. Clearly, the right audience for this book is the developer community. If you’re removed from day to day coding, the book may not be as valuable.

If you’re looking for hands-on examples, this book has plenty of them. It includes all of the building blocks necessary from building your first service in PHP to creating an orchestrated process using BPEL. I felt that there was more emphasis on the coding efforts than necessary, however, and not enough on some of the theory behind it. This was evident in some of the early examples. In the sections on PHP, the examples result in a service that stores an XML representation of a purchase order in a database. The examples in the book take the incoming XML, create a PHP array representation of the XML, then convert it back to a DOM representation for storage in the database. While I do not know whether this approach was due to a limitation of the SOAP extensions, as an architect, it left me shaking my head. If the service is simply a pass-through to a database, there’s no reason to take all the time to parse the XML, bind it to some internal data structure, and then turn that internal data structure back into XML. Continuing on, the example then added XML schema validation to the mix, but it was performed by a stored procedure in the database. If you understand the role XML Schema validation has in security, odds are that XML schema validation will have occurred long before we even reach the back end database.

The section on WS-BPEL followed a similar vein. The bulk of the book was simply focused on walking you through the steps necessary to perform the actions in ActiveBPEL, rather than on building a sound understanding of WS-BPEL. There were pages upon pages of instructions on what menus to select, items to click, etc. I was very surprised at the lack of screenshots or graphical representations of the processes in the book. More often than not, they recommended using the Source tab in ActiveBPEL to compare the BPEL document in the book to what should have appeared after going through all the actions. My personal view on BPEL is that I don’t ever want to see it. I want to leverage the modeling capabilities of any BPM tool, and let the tool worry about BPEL behind the scenes.

Overall, for a book heavily focused on the developer community using PHP and ActiveBPEL (somewhat of a narrow audience, in my opinion), it’s certainly a good walkthrough to get your feet wet. It’s not going to give you the architectural skills you need, but it will move you through a lot of material very quickly. For people who are quick studies and just want some solid examples, you may find this a decent investment. For someone looking for more theory and architectural principles behind SOA and WS-BPEL, I’d probably look elsewhere.

Disclosure: This book was provided to me at no cost for the purposes of reviewing it. If you’re interested in having me review a book, please contact me at todd at biske dot com.

Composite Applications

Composite Applications

Brandon Satrom posted some of his thoughts on the need for a composite application framework, or CAF, on his blog and specifically called me out as someone from which he’d like to hear a response. I’ll certainly oblige, as inter-blog conversations are one of the reasons I do this.

Brandon’s posted two excerpts from the document he’s working on, here and here. The first document tries to frame up the need for composition, while the second document goes far deeper into the discussion around what a composite application is in the first place.

I’m not going to focus on the need for composition for one very simple reason. If we look at the definition presented in the second post, as well as articulated by Mike Walker in his followup post, composite applications are ones which leverage functionality from other applications or services. If this is the case, shouldn’t every application we build be a composite application? There are vendors out there who market “Composite Application Builders” which can largely be described as EAI tools focused on the presentation tier. They contain some form of adapter for third party applications, legacy systems, that allow functionality to be accessed from a presentation tier, rather than as a general purpose service enablement tool. Certainly, there are enterprises that have a need for such a tool. My own opinion, however, is that this type of an approach is a tactical band-aid. By jumping to the presentation tier, there’s a risk that these integrations are all done from a tactical perspective, rather than taking a step back and figuring out what services need to be exposed by your existing applications, completely separate from the construction of any particular user-facing application.

So, if you agree with me that all applications will be composite applications, then what we need is not a Composite Application Framework, but a Composition Framework. It’s a subtle difference, but it gets us away from the notion of tactical application integration and toward the strategic notion of composition simply being part of how we build new user-facing systems. When I think about this, I still wind up breaking it into two domains. The first is how to easily allow user-facing applications to easily consume services. Again, in my opinion, there’s not much different here than the things you need to do to make services easily consumable, regardless of whether or not the consumer is user-facing or not. The assumption needs to be that a consumer is likely to be using more than one service, and that they’ll have a need to share some amount of data across those services. If the data is represented differently in those services, we create work for the consumer. The consumer must translate and transform the data from one representation to one or more additional representations. If this is a common pattern for all consumers, this logic will be repeated over and over. If our services all expose their information in a consistent manner, we can minimize the amount of translation and transformation logic in the consumer, and implement it once in the provider. Great concept, but also a very difficult problem. That’s why I use the term consistent, rather than standard. A single messaging schema for all data is a standard, and by definition consistent, but I don’t think I’ll get too many arguments that coming up with that one standard is an extremely difficult, and some might say impossible, task.

Beyond this, what other needs are there that are specific to user-facing consumers? Certainly, there are technology decisions that must be considered. What’s the framework you use for building user-facing systems? Are you leveraging portal technology? Is everything web-based? Are you using AJAX? Flash? Is everything desktop-based using .NET and Windows Presentation Foundation? All of these things have an impact on how your services that are targeted for use by the presentation tier must be exposed, and therefore must be factored into your composition framework. Beyond this, however, it really comes down to an understanding of how applications are going to be used. I discussed this a bit in my Integration at the Desktop posts (here and here). The key question is whether or not you want a framework that facilitates inter-application communication on the desktop, or whether you want to deal with things in a point-to-point manner as they arise. The only way to know is to understand your users, not through a one-time analysis, but through continuous communication, so you can know whether or not a need exists today, and whether or not a need is coming in the near future. Any framework we put in place is largely about building infrastructure. Building infrastructure is not easy. You want to build it in advance of need, but sometimes gauging that need is difficult. Case in point: Lambert St. Louis International Airport has a brand new runway that essentially sits unused. Between the time the project was funded and completed, TWA was purchased by American Airlines, half of the flights in and out were cut, Sept. 11th happened, etc. The needs changed. They have great infrastructure, but no one to use it. Building an extensive composition framework at the presentation tier must factor in the applications that your users currently leverage, the increased use of collaboration and workflow technology, the things that the users do on their own through Excel, web-based tools, and anything else they can find, how their job function is changing according to business needs and goals, and much more.

So, my recommendations in this space would be:

- Start with consistency of data representations. This has benefits for both service-to-service integration, as well as UI-to-service integration.

- Understand the technologies used to build user-facing applications, and ensure that your services are easily consumable by those technologies.

- Understand your users and continually assess the need for a generalized inter-application communication framework. Be sure you know how you’ll go from a standard way of supporting point-to-point communication to a broader communication framework if and when the need becomes concrete.

Future of SOA Podcast available

Future of SOA Podcast available

I was a panelist for a discussion on the Future of SOA at The Open Group Enterprise Architecture Practitioner’s Conference in late July. The session was recorded and is now available as a podcast from Dana Gardner’s BriefingsDirect page. Please feel free to followup with me on any questions you may have after listening to it.

Acronym Soup

Acronym Soup

The panel discussion I was involved with at The Open Group Enterprise Architecture Practitioner’s Conference went very well, at least in my opinion. We (myself, our moderator Dana Gardner, Beth Gold-Bernstein, Tony Baer, and Eric Knorr) covered a range of questions on the future of SOA, such as when will we know we’re there, will we still be discussing it 5 years from now or will it be subsumed by EA as a whole, etc.

In our preparations for the panel, one of the topics that was thrown out there was how SOA will play with BPM, EDA, BI, etc. I should point out that our prep call only set the basic framework of what would be discussed, we didn’t script anything. It was quite difficult biting my tongue on the prep call as I wanted to jump right into the debate. Anyway, because it didn’t get the depth of discussion that I was expecting, I thought I’d post some of my thoughts here.

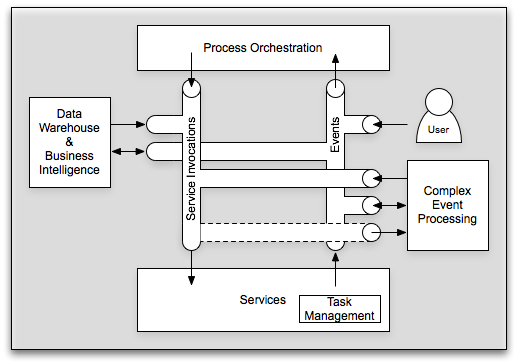

I’ve previously posted on the integration between SOA, BPM, Workflow, and EDA, or probably better stated, services, processes, and events. There are people who will argue that EDA is simply part of SOA, I’m not one of them, but that’s not a debate I’m looking to have here. It’s hard to argue that there are natural connections between services, processes, and events. I just recently posted on BI and SOA. So, it’s time to try to bring all of these together. Let’s start with a picture:

In its simplest form, I still like to begin with the three critical components: processes, services, and events. Services are explicitly invoked by sending a service invocation message. Processes are orchestrated through a sequence of events, whether human-generated or machine generated. Services can return responses, which in essence are a “special” event directed solely at the requestor, or they can publish events available for general listening. So, we’ve covered SOA, BPM, EDA, and workflow. To bring in the world of EDW (Enterprise Data Warehouse), BI (Business Intelligence), CEP (Complex Event Processing), and even BAM (Business Activity Monitoring, although not shown on the diagram), the key is using these messages for purposes other than which they were intended. CEP looks at all messages and is able to provide a mechanism for the creation of new events or service invocations based upon an analysis of the message flow. Likewise, take these same messages and let them flow into your data warehouse and allow your business intelligence to perform some complicated analytics on them. You can almost view CEP as a sort of analytical engine operating on a small window, while business intelligence can act as the analytical engine operating on a large window. Just with CEP, your EDW and BI system can (in addition to report) generate events and/or service invocations. Simply put, all of the technologies associated with all of these acronyms need to come together in a holistic vision. At the conference, Joe Hill from EDS pointed out that when many of these technologies solved 95% of the problem they were brought in for. Unfortunately, when your problem space is broadened to where it all needs to integrate, the laws of multiplication no longer apply. That is, if you have two solutions that solved 95% of their respective problems, they don’t solve 0.95 * 0.95 = 90.25% of the combined problem. Odds are that combined problem falls into the 5% that neither of them solved on their own.

It is the responsibility of enterprise architecture to start taking the broader perspective on these items. The bulk of the projects today are still going to be attacking point problems. While those still need to be solved, we need to ensure that these things fit into a broader context. I’m willing to bet that most service developers have never given thought to whether the service messages could be incorporated into a data warehouse. It’s just as unlikely that they’re publishing events and exposing some potentially useful information for other systems, even where their particular solution didn’t require any events. So, to answer the question of whether SOA will be a term we use 5 years from now, I certainly hope we’re still using it, however, I hope that it’s not still as some standalone initiative distinct from other enterprise-scoped efforts. It all does need to fall under the umbrella of enterprise architecture, but that doesn’t mean that the EA still doesn’t need to be talking about services, events, processes, etc.

Update: I redid the picture to make it clearer (hopefully).

Most popular posts to date

Most popular posts to date

It’s funny how these syndicated feeds can be just like syndicated TV. I’ve decided to leverage Google Analytics and create a post with links to the most popular entries since January 2006. My blog isn’t really a diary of activities, but a collection of opinions and advice that hopefully remain relevant. While the occasional Google search will lead you to find many of these, many of these items have long since dropped off the normal RSS feed. So, much like the long-running TV shows like to clip together a “best of” show, here’s my “best of” entry according to Google Analytics.

- Barriers to SOA Adoption: This was originally posted on May 4, 2007, and was in response to a ZapThink ZapFlash on the subject.

- Reusing reuse…: This was originally posted on August 30, 2006, and discusses how SOA should not be sold purely as a means to achieve reuse.

- Service Taxonomy: This was originally posted on December 18, 2006 and was my 100th post. It discusses the importance and challenges of developing a service taxonomy.

- Is the SOA Suite good or bad? This was originally posted on March 15, 2007 and stresses that whatever infrastructure you select (suite or best-of-breed), the important factor is that it fit within a vendor-independent target architecture.

- Well defined interfaces: This post is the oldest one on the list, from February 24, 2006. It discusses what I believe is the important factor in creating a well-defined interface.

- Uptake of Complex Event Processing (CEP): This post from February 19, 2007 discusses my thoughts on the pace that major enterprises will take up CEP technologies and certainly raised some interesting debate from some CEP vendors.

- Master Metadata/Policy Management: This post from March 26, 2007 discusses the increasing problem of managing policies and metadata, and the number of metadata repositories than may exist in an enterprise.

- The Power of the Feedback Loop: This post from January 5, 2007 was one of my favorites. I think it’s the first time that a cow-powered dairy farm was compared to enterprise IT.

- The expanding world of the “repistry”: This post from August 25, 2006 discusses registries, repositories, CMDBs and the like.

- Preparing the IT Organization for SOA: This is a June 20, 2006 response to a question posted by Brenda Michelson on her eBizQ blog, which was encouraging a discussion around Business Driven Architecture.

- SOA Maturity Model: This post on February 15, 2007 opened up a short-lived debate on maturity models, but this is certainly a topic of interested to many enterprises.

- SOA and Virtualization: This post from December 11, 2006 tried to give some ideas on where there was a connection between SOA and virtualization technologies. It’s surprising to me that this post is in the top 5, because you’d think the two would be an apples and oranges type of discussion.

- Top-Down, Bottom-Up, Middle-Out, Outside-In, Chicken, Egg, whatever: Probably one of the longest titles I’ve had, this post from June 6, 2006 discusses the many different ways that SOA and BPM can be approached, ultimately stating that the two are inseparable.

- Converging in the middle: This post from October 26, 2006 discusses my whole take on the “in the middle” capabilities that may be needed as part of SOA adoption along with a view of how the different vendors are coming at it, whether through an ESB, an appliance, EAI, BPM, WSM, etc. I gave a talk on this subject at Catalyst 2006, and it’s nice to see that the topic is still appealing to many.

- SOA and EA… This post on November 6, 2006 discussed the perceived differences between traditional EA practitioners and SOA adoption efforts.

Hopefully, you’ll give some of these older items a read. Just as I encouraged in my feedback loop post, I do leverage Google Analytics to see what people are reading, and to see what items have staying power. There’s always a spike when an entry is first posted (e.g. my iPhone review), and links from other sites always boost things up. Once a post has been up for a month, it’s good to go back and see what people are still finding through searches, etc.

Widgets, Gadgets, Mashups, and Composite Applications

Widgets, Gadgets, Mashups, and Composite Applications

In the recently released SOA Insights Podcast, Dana and guests discussed the wacky world of mashups and composite applications. The panelists all agreed that mashups and composite applications are effectively the same thing, however, they did state that mashups tend to be associated with web-based presentation more so than composite applications. The debate moved into a discussion of SaaS providers (e.g. Salesforce.com) and aggregators like StrikeIron. Google apps was also thrown into the mix, however, I don’t consider that a mashup or a composite application. Google Calendar or Google Spreadsheet are really just hosted versions of traditional desktop applications. Given that the conversation went this direction, it surprised me that the discussion did not go down into the area of Widgets and Gadgets, depending on which operating system you like.

Before we go there, let’s first look features of mashups. The most familiar notion of a mashup is a geographic overlay of some information on a Google map. This is possible through the availability of data as XML from simple HTTP calls, the scriptability of a web-based presentation component, such as Google maps, and the use of AJAX technologies to tie the two together. How does this relate to SOA? The most direct connection is in the first item: data services making data available as XML over HTTP. Without this, everything needs to move to the server side where data access frameworks like ADO.NET, Hibernate, etc. can be leveraged. The other half of this is the presentation tier, where the combination of JavaScript and CSS now allows HTML-based components to effectively expose an API that can be executed within a browser. This is in contrast to Portal technology, which is still a predominantly server-side technology. Effectively, JavaScript and CSS now allow a component-based model for web-based application development.

To make this more applicable in the corporate world, we need to look at the corporate problems. Many enterprise IT department are not creating applications for an end user on the internet, but rather for their own corporate employees. Frequently, these applications are used for simple tasks (think data entry) associated with the execution of a business process. Unfortunately, those applications take time to run. If they’re desktop applications, there’s probably a lot of bloat in them and they take a lot of time to startup. On the consumer side of things, I’ll use Quicken as an example. I may only need to enter one or two transactions, but I used to have to start the whole bloated application with all of its features to do so. Something that should take 30 seconds winds up taking 2-3 minutes.

Now, let’s enter the world of Widgets and Gadgets. It really started with a program called Konfabulator (which was eventually acquired by Yahoo), continued with Apple’s Dashboard, and most recently was continued with Microsoft Vista’s dashboard. Effectively, these platforms leverage DHTML, JavaScript, CSS, and if necessary, some platform specific APIs (mainly for saving preferences, but can be used to access local desktop resources), to present web-based applications that are very narrow in function. For example, the latest release of Quicken for the Mac included a Dashboard Widget that allows data entry. I no longer have to start all of Quicken simply to enter a transaction. This results in increased efficiencies. Because these Widgets and Gadgets are built using DHTML, JavaScript, and CSS, they can pull in data on the fly via XML over HTTP, instead of having the user navigate through endless pages to get to what they need to do. Furthermore, the platform integration allows data to be carried in to the widget and be “mashed” on the fly.

I believe these technologies have the most potential within the enterprise. I can’t drag an Word document into a browser window normally, but I can drag it onto on Apple Dashboard Widget (I don’t know about Vista Gadgets). Secondly, their lightweight nature and immediate access makes them extremely well-suited for workflow tasks. Theoretically, the widget itself could be embedded in a task notification message. The task manager for the user (which could be a widget/gadget as well, or it could be something like Outlook) would leverage the native HTML/CSS/JavaScript engine to generate the UI on the fly, pull in the data via XML/HTTP and allow the user to directly execute the task without all of the overhead of launching applications and navigating through web pages.

There are certainly still challenges that exist before this becomes mainstream, security being the largest, along with the risk of badly coded widgets consuming resources. If you’ve ever tried to do some AJAX programming and used timers, you’ll know that it’s easy to make a mistake and wind up with a ton of background HTTP calls trying to get XML data that just grinds the system to a halt. This is just the normal maturity curve for technology usage, however. As someone with interest in user productivity and efficiency, I’m excited about the opportunities that Widgets and Gadgets may provide for all of us, both corporate user and home user, in the future.