Archive for the ‘CEP’ Category

Making Internal Activity Streams like Tibbr Valuable

Rob Koplowitz of Forrester recently posted, Why Tibbr Matters. He provided some examples of where an activity stream across a network like Tibbr could add value, and some examples where it couldn’t. I responded with a comment and I wanted to elaborate on my comments here.

Activity streams tied to your company that are available through tools like Chatter, Yammer, and Tibbr have potential for adding value, but there are some big barriers that must be overcome. I’ve used email, SharePoint and other internal portals, and Yammer inside of a corporate setting, and there are two simple objectives that these tools should have, at a minimum:

- Moving information from the privileged few to a broader audience.

- Making new information available that previously wasn’t.

On the first item, a challenge that probably every organization has is getting the information to the right people. The information exists, but it only spreads through word of mouth or to people that the information holders think need to be aware of it. The Twitter model is the right approach for addressing this, by allowing people to follow people or topics of interest (either via saved keyword searches or hashtags), rather than having to wait until it is explicitly sent to them. In order for this model to work, however, all information must be public. As soon as private, directed messages come into play, that information is hidden. I don’t see this as the bigger of the two challenges, however, because at least the information exists; it’s just not getting everywhere it needs to go.

The second item is the greater challenge. If there is information that simply isn’t being communicated, there is no tool that is going to magically make that information appear. The more information sources you have participating in the network, the greater potential you have for getting value out of that network. Why does everyone join Facebook? It’s the network with the greatest participation, and therefore, the greatest potential value. There’s a catch-22 here, because you want participants to get value quickly, so they stay in the network, but once you get over the hurdle, the growth will come. So how do we do this?

In a corporate setting, the participants are not just your employees. The participants must include your systems. This is why Tibbr is potentially in a good spot. Tibco’s background is not in collaboration or social media, it is in system integration. Unfortunately, all of the web-based request/response systems over the past decade have gotten us away from the asynchronous, event-based system design of the past. Even SOA tends to imply a request-response paradigm in most people’s minds, meaning I have to know what to ask for in advance. Both our systems and our people need to expose items of interest without any preconceived notion of who might be interested. Yes, we need to be cautious about signal-to-noise ratio, but I don’t think that problem is any different than trying to manage redundancy in an application or service portfolio. As part of your deployment process, get a list of the events/messages that are available, categorize them, and manage them effectively. If the Twitterverse can quickly come up with accepted hashtags, why can’t we do the same inside our corporate worlds?
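As a rough illustration of systems exposing items of interest without knowing who is listening, here is a minimal in-memory sketch. The `ActivityStream` class, its method names, and the hashtag-style tags are all hypothetical and are not Tibbr's API; a real deployment would sit on a message bus rather than a Python dictionary.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Set


class ActivityStream:
    """Hypothetical stream: publishers tag events, followers choose tags."""

    def __init__(self) -> None:
        self._followers: Dict[str, List[Callable[[dict], None]]] = defaultdict(list)
        # Every tag ever published, so the list of available events can be
        # reviewed and categorized as part of the deployment process.
        self.catalog: Set[str] = set()

    def follow(self, tag: str, handler: Callable[[dict], None]) -> None:
        """Register interest in a tag; the publisher never learns who follows."""
        self._followers[tag].append(handler)

    def publish(self, source: str, text: str, tags: List[str]) -> int:
        """Publish an event and return how many handlers received it."""
        event = {"source": source, "text": text, "tags": list(tags)}
        self.catalog.update(tags)
        delivered = 0
        for tag in tags:
            for handler in self._followers.get(tag, []):
                handler(event)
                delivered += 1
        return delivered
```

The point of the sketch is that `publish` succeeds even when nobody follows the tag: the event is still recorded in the catalog, which is what makes it discoverable later.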

Since I’ve previously asked for this year to be the year of the event, let’s do so in a way that allows these events to feed into our internal activity streams and social networking tools, and start getting real value out of these technologies.

Tibbr and Information in the Enterprise

Back in March of this year, I asked “Is Twitter the cloud bus?” While we haven’t quite gone there yet, Tibco has run with the idea of Twitter as an enterprise messaging bus and announced Tibbr. This is a positive step toward the enterprise figuring out how to leverage social computing technologies in the enterprise. While I think Tibco is on the right track with this, my pragmatist nature also sees that there’s a long way to go before these technologies achieve mainstream adoption.

The biggest challenge is creating the robust information pool. Today, the biggest complaint of newcomers to Twitter is finding information of value. It’s like walking into the largest social gathering you’ve ever seen and not knowing anyone. You can walk around and see if you overhear someone discussing something interesting, but that can be a daunting task. Luckily, however, there are millions of topics being discussed at any given time, so with the help of a search engine or some trusted parties, you can easily begin to build the network. In the enterprise, it’s not quite so easy.

When looking at information sharing, here are two key questions to consider:

- Are new people receiving information that they would not otherwise have seen, and are they contributing back to the conversation?

- Are the conversation groups the same, but the information content improved in either its relevance, timeliness, quality, preservation, or robustness?

If the answer to both of those is “no”, then all you’ve done is create a new channel for an existing audience containing information that was already being shared between them. Your goals must be to enable one or more of the following:

- Getting existing information to/from new parties

- Getting new information to/from existing parties

- Delivering more robust/higher quality information to/from existing parties

- Delivering information in a more timely/appropriate manner to existing parties

- Making information more accessible (e.g. search friendly)

The challenge in achieving these goals via social networking tools begins with information sources. If you are an organization with 10,000 employees, only a small percentage of those employees will be early adopters. Strictly for illustrative purposes, let’s use IT department size as a reasonable guess at the number of early adopters. In reality, a lot of people in IT will jump on it, as will a smaller percentage of employees outside of IT. A large IT department for a 10,000 person company would be 5%, so we’re looking at 500 people participating on the social network. Can you see the challenge? Are these 500 people merely going to extend the conversations they’re having with the same old people, or is the content going to meet the above goals?

Now along comes Tibbr, but does the inclusion of applications as sources of information improve anything? If anything, the way we’ve approached application architecture is even worse than dealing with people! Applications typically only share information when explicitly required by some other application. How many applications in your enterprise routinely post general events, without concern for who may be listening for them? Putting Tibbr or any other message bus in place is only going to be as valuable as the information that’s placed on the bus, and most applications have been designed to keep information within their boundaries unless required.

So, to be successful, there’s really one key thing that has to happen:

Both people and applications must move from a “share when asked” mentality to a “share by default” mentality.

When I began this blog, I wasn’t directing the conversation at anyone in particular. Rather, I made the information available to anyone who may find it valuable. That’s the mentality we need in our organizations and the architecture we need in our applications. Events and messages can direct people to the appropriate gateway, with either direct access to the information, or instructions on how to obtain it if security is a concern. Today, all of that information is obscured at a minimum, and more likely locked down. Change your thinking and your architecture, and the stage is set for getting value from tools like Tibbr.

Oracle Business Activity Monitoring and Oracle Complex Event Processing

Full disclosure: I’m attending Oracle OpenWorld courtesy of Oracle.

I was late to this session as my panel preceded it, and the questions continued for a good 30 minutes after my panel ended. Thank you to all who attended, and the positive feedback was great.

The speaker went over the basics of Oracle’s CEP platform, introducing the query language they use for event streams (CQL). No surprises in terms of the approach; it looks like other CEP engines I’ve seen. They emphasized the role that Coherence plays in the scalability of the platform. I do like the use of Coherence as a common platform across all of their middleware products; it makes a lot of sense.

They went on to present a demo of CEP in action, where the CEP was processing location-based events associated with emergency responders. One thing they didn’t call out is the need for some system to make the decision to actually publish events. In my opinion, this is one of the key things holding back adoption of things like CEP. The average app developer working on a web-based transactional system just doesn’t think about publishing events unless they have some concrete consumer of those events. Just as we may not know all consumers of a service, we may not know all consumers of events. Would the developer on this system really know to be publishing source locations associated with connectivity or message traffic in advance? Initially, we’ll need to simply leverage message flows that are already occurring, similar to an intrusion detection system, to extract information that should be events but instead is embedded in some other message. This creates a need for standard headers and message bodies to allow the CEP engine to have something consistent to query against.
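To make the idea of standard headers concrete, here is a small sketch of a common envelope wrapped around messages that were originally sent for some other purpose. The `EventEnvelope` fields and the `from_message` and `select` helpers are assumptions made for illustration; they are not Oracle CEP or CQL constructs.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, List


@dataclass(frozen=True)
class EventEnvelope:
    """Hypothetical standard header set shared by every event."""
    event_type: str
    source: str
    occurred_at: datetime
    body: Dict[str, Any] = field(default_factory=dict)


def from_message(message: Dict[str, Any], source: str) -> EventEnvelope:
    """Lift an event out of a message that was sent for another purpose."""
    return EventEnvelope(
        event_type=message.get("type", "unknown"),
        source=source,
        occurred_at=datetime.now(timezone.utc),
        body={k: v for k, v in message.items() if k != "type"},
    )


def select(events: Iterable[EventEnvelope], event_type: str) -> List[EventEnvelope]:
    """Query on a header field, with no knowledge of each body's layout."""
    return [e for e in events if e.event_type == event_type]
```

Because every event carries the same headers, a query such as `select(events, "location.update")` works across sources whose message bodies have nothing else in common.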

Consistent with my previous posts on CEP, it looks like great technology, but the definition of problems where it is best applied is still evolving and maturing.

Making events, EDA, CEP, and SOA interesting

I’m back from my vacation and have a few topics queued up for some blog entries. The first one that I wanted to cover was the topic of events and SOA. The relationship between EDA, CEP, and SOA is one that pops up on a regular basis; however, in my opinion, it still hasn’t reached a sustained level of interest. There was a big peak some time ago when Oracle and Gartner used the ill-fated moniker SOA 2.0 to represent the combination of SOA and EDA, but once the backlash died down, the discussion around events faded back into the background again. Thankfully, it hasn’t gone away completely. One of the more recent publications on the topic came from Rich Seeley with SearchWebServices.com in this article. He stated that, “The relationship of complex event processing (CEP) to service-oriented architecture (SOA) remains, in a word, complex.” Why is this the case?

The article included quotes from Jason Bloomberg of ZapThink comparing EDA to SOA. While I usually agree with the ZapThink guys, I disagree with Jason’s quote that there is no particular reason to distinguish SOA from EDA. Jason points out that all service messages are essentially software events which “contain all the information you’d ever want about the behavior and state of your business.” At a technical level, there’s nothing wrong with Jason’s statement. Where there are differences, however, is in the intent of the message. A message associated with an interaction between a service consumer and a service provider is either a request for the provider to do something or a response from the provider back to that consumer. The fundamental difference, in my opinion, is that these messages are directed at a specific destination. While you can certainly intercept these messages and use them for other purposes (and I’d argue that doing so for business intelligence analysis reasons is a good thing), there’s a risk involved in doing this because now there are unintended side effects. In contrast, events have no requirement to be directed at any particular destination, typically using a publish-subscribe approach for distribution.

Let me get back to the question of complexity. I will admit that discussions like the paragraph above are part of the reason that people find EDA and CEP hard to grasp. Oftentimes, discussion in the blogosphere will focus on areas of disagreement, losing sight of the areas of agreement. I’d argue that if you talked to Jason and me about the relationship between SOA and EDA, 80% of what you’d hear would be consistent. So, just because Rich Seeley received a number of different takes on SOA, CEP, and EDA doesn’t mean it’s complex.

The right question we should be tackling is how to make events, EDA, and CEP more interesting, building on the natural relationship to SOA. As I’ve previously stated in my uptake of CEP post, I don’t think that most organizations are ready for CEP and EDA yet. The debates that are occurring in the blogosphere and press are being driven by people that have a vested interest (typically vendors, but you could argue that niche analyst firms do as well) in creating “buzz” about the topic. As a corporate practitioner, I have no such vested interest except where it makes business sense for my employer. So, I need to ask how to make CEP and EDA relevant (and interesting) to the business.

The challenge with it, and why it was important that I wrote about my difference of opinion with Jason of ZapThink, is that events, on their own, don’t do anything. A service performs some function. It does something. The business can grasp this. An event is just a nugget of information. A collection of events presented to business stakeholders is not going to be very meaningful until you start doing something with them.

As a comparison, let’s look at baseball. If you watch or listen to a baseball game, you’ll get a barrage of statistics. Are they useful? Some managers, like Tony LaRussa of my hometown St. Louis Cardinals, have always made extensive use of the data. Has it made him more successful? We’ll probably never know. We can certainly say that he’s been a successful manager, but can we tie it specifically to his use of event capture and analysis? There are probably other managers or baseball pundits that would argue that the cost of collection and analysis isn’t worth it.

The same thing holds true for EDA and CEP. There is a cost associated with the generation of events. There is a cost associated with the analysis of events. What’s missing is the benefit. To get this, we need to do analysis of the business and come up with suitable justification. For a domain such as risk analytics associated with securities trading, the justification is there. Complex analysis of the trading and news events occurring in real time can result in better timed market activities with millions of dollars in potential benefits. In other domains, it may not be as crystal clear. If an organization has a stated goal of better knowledge of their customers, it would seem that event capture and analysis could assist in this, but how do we quantify the potential benefits? Just as with SOA, I think a key to this is selecting an appropriate proof-of-concept and then pilot. Some event capture and analysis can be done without purchasing any new infrastructure. There’s nothing wrong with performing analysis on a week’s worth of data that takes another week to complete if the end result is valuable, business relevant information. As Jason suggests, you can use service messages as your starting point for analysis, so if you’ve got audit logs of them, you only then need an analysis engine. Every organization already has many of these, and I’m not talking about a BI system. I’m talking about employees. While we may not capture all of the correlations, most of us are pretty good at spotting trends. It’s simply a matter of having someone look at the information that’s already there. Guess what that activity is? It’s business analysis. Do the analysis, understand the business, create the business case, and go make things better.

External Events in Action

I received a press release in email from Xignite entitled “Partnership Delivers Financial Professionals Responsiveness, Collaboration Via Timely Earnings Data.” In this release Xignite announced their partnership with Wall Street Horizon, a provider of earnings event and calendar information to the investment industry. Xignite will redistribute Horizon’s earnings and events calendar content as part of its street-event driven series on-demand financial web service.

While I normally don’t try to be a recycler of press releases from vendors, as I’d much rather comment on things more directly associated with work as a practicing architect, I’d be very happy to see more and more of these types of releases. Why? In the past, I’ve talked about the importance of events, such as this post. One of the challenges, however, is that I don’t really feel that there are good sources of events, especially ones that come in from outside of the enterprise (although there are times that I think that outside sources are more likely than internal sources…). Here’s a press release that shows that external sources are appearing and, through partnerships, trying to increase their audience. It would be great if some of the industry consortiums for specific verticals would develop some standards in the event space.

Acronym Soup

The panel discussion I was involved with at The Open Group Enterprise Architecture Practitioner’s Conference went very well, at least in my opinion. We (myself, our moderator Dana Gardner, Beth Gold-Bernstein, Tony Baer, and Eric Knorr) covered a range of questions on the future of SOA, such as when will we know we’re there, will we still be discussing it 5 years from now or will it be subsumed by EA as a whole, etc.

In our preparations for the panel, one of the topics that was thrown out there was how SOA will play with BPM, EDA, BI, etc. I should point out that our prep call only set the basic framework of what would be discussed, we didn’t script anything. It was quite difficult biting my tongue on the prep call as I wanted to jump right into the debate. Anyway, because it didn’t get the depth of discussion that I was expecting, I thought I’d post some of my thoughts here.

I’ve previously posted on the integration between SOA, BPM, Workflow, and EDA, or probably better stated, services, processes, and events. There are people who will argue that EDA is simply part of SOA. I’m not one of them, but that’s not a debate I’m looking to have here. It’s hard to argue that there aren’t natural connections between services, processes, and events. I just recently posted on BI and SOA. So, it’s time to try to bring all of these together. Let’s start with a picture:

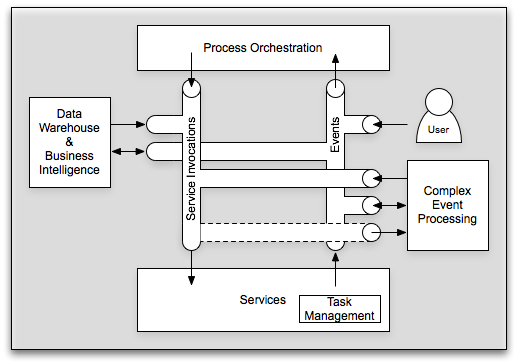

In its simplest form, I still like to begin with the three critical components: processes, services, and events. Services are explicitly invoked by sending a service invocation message. Processes are orchestrated through a sequence of events, whether human-generated or machine-generated. Services can return responses, which in essence are a “special” event directed solely at the requestor, or they can publish events available for general listening. So, we’ve covered SOA, BPM, EDA, and workflow. To bring in the world of EDW (Enterprise Data Warehouse), BI (Business Intelligence), CEP (Complex Event Processing), and even BAM (Business Activity Monitoring, although not shown on the diagram), the key is using these messages for purposes other than those for which they were intended. CEP looks at all messages and is able to provide a mechanism for the creation of new events or service invocations based upon an analysis of the message flow. Likewise, take these same messages and let them flow into your data warehouse and allow your business intelligence to perform some complicated analytics on them. You can almost view CEP as a sort of analytical engine operating on a small window, while business intelligence can act as the analytical engine operating on a large window. Just as with CEP, your EDW and BI system can (in addition to reporting) generate events and/or service invocations.

Simply put, all of the technologies associated with all of these acronyms need to come together in a holistic vision. At the conference, Joe Hill from EDS pointed out that many of these technologies solved 95% of the problem they were brought in for. Unfortunately, when your problem space is broadened to where it all needs to integrate, the laws of multiplication no longer apply. That is, if you have two solutions that solved 95% of their respective problems, they don’t solve 0.95 * 0.95 = 90.25% of the combined problem. Odds are that the combined problem falls into the 5% that neither of them solved on their own.
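The small-window versus large-window distinction can be sketched in a few lines. The `SlidingWindowDetector` class and `daily_totals` function below are hypothetical stand-ins for a CEP engine and a BI rollup, assuming each message is reduced to a timestamp in seconds.

```python
from collections import deque
from typing import Deque, Dict, Iterable


class SlidingWindowDetector:
    """CEP-style view: only the last `window_seconds` of messages matter."""

    def __init__(self, window_seconds: float, threshold: int) -> None:
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._times: Deque[float] = deque()

    def observe(self, timestamp: float) -> bool:
        """Return True when the window holds at least `threshold` messages."""
        self._times.append(timestamp)
        # Drop anything that has aged out of the window.
        while self._times and self._times[0] <= timestamp - self.window_seconds:
            self._times.popleft()
        return len(self._times) >= self.threshold


def daily_totals(timestamps: Iterable[float], seconds_per_day: int = 86400) -> Dict[int, int]:
    """BI-style view: the same messages, aggregated over the full history."""
    totals: Dict[int, int] = {}
    for t in timestamps:
        day = int(t // seconds_per_day)
        totals[day] = totals.get(day, 0) + 1
    return totals
```

A `True` from `observe` is the point where a real engine would generate a new event or service invocation, while `daily_totals` is the kind of result a warehouse report would show long after the fact.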

It is the responsibility of enterprise architecture to start taking the broader perspective on these items. The bulk of the projects today are still going to be attacking point problems. While those still need to be solved, we need to ensure that these things fit into a broader context. I’m willing to bet that most service developers have never given thought to whether the service messages could be incorporated into a data warehouse. It’s just as unlikely that they’re publishing events and exposing some potentially useful information for other systems, even where their particular solution didn’t require any events. So, to answer the question of whether SOA will be a term we use 5 years from now, I certainly hope we’re still using it, however, I hope that it’s not still as some standalone initiative distinct from other enterprise-scoped efforts. It all does need to fall under the umbrella of enterprise architecture, but that doesn’t mean that the EA still doesn’t need to be talking about services, events, processes, etc.

Update: I redid the picture to make it clearer (hopefully).

External consumers and providers

James McGovern, in his links entry for April 11th, posted this comment in regard to my entry on what SOA adoption actually means:

“A measurement that would be interesting is to ask enterprises how many services do you have that are consumed outside of your enterprise. The numbers would be dramatically lower…”

As I thought about this, it became more and more interesting. First, I definitely agree that the number of services is going to be dramatically lower, unless your company is already a service provider (think ASP), in which case it should constitute the majority of your service portfolio. What about other verticals, however? Certainly supply chain interactions will involve external entities. Truth be told, there’s a lot of potential for interactions with partner companies. How many companies outsource payroll processing to ADP or someone else? I’d venture a guess that there are probably areas for commodity services in every vertical. Over time, things that once were competitive differentiators become commodities. Once that happens, a marketplace opens up for commodity providers that focus on operational excellence and low cost, and the companies that prefer to focus on customer service get rid of their homegrown infrastructure and leverage the commodity provider. Guess what, when that happens, the potential now exists for service interactions. I recently presented some introductory information on service concepts and described business services as both the ones that represent the primary business functions and the ones that support the primary business, such as HR, payroll, etc. Technology clearly plays a big role in both.

You may be thinking, “No arguments on what you said, but James asked about services consumed by outsiders, not provided by outsiders.” Quite true, but again, I’d be willing to bet that the vast majority of these B2B interactions will require bi-directional communications. It may be the case that 90% of the time, the partner acts in the service provider role, but odds are that some of that processing will require them having the ability to make service calls back to you. At a minimum, some form of events should be flowing back into your infrastructure. The more information flow can be a circle, rather than a one-way line, the greater the potential for leveraging emerging technologies like CEP for continued innovation. If the information only flows one way, you severely restrict your ability to innovate based on that information.

Aside: James also posted some musings yesterday on Open Source and the possibilities of it playing a role in commodity vertical applications. If that happened, there would certainly be potential implications. It probably wouldn’t take long for someone to create a hosted solution for these open source offerings, again creating the potential for service interactions between the two companies.

The end result of my thinking on this is that if your thinking on SOA is constrained to inside your firewall, it won’t be very long at all before you need to extend that thinking, both as a consumer of services provided from the outside as well as a provider of services that will be consumed by the outside. Companies that make the claim that they’ve “adopted SOA” should have a view that encompasses all of it, regardless of whether their core business is being a service provider or not.

The management continuum

Mark Palmer of Apama continued his series of posts on myths around the EDA/CEP space, with number 3: BAM and BPM are Converging. Mark hit on a subject that I’ve spoken with clients about, however, I don’t believe that I’ve ever posted on it.

Mark’s premise is that it’s not BAM and BPM that are converging, it’s BAM and EDA. Converging probably isn’t the right word here, as it implies that the two will become one, which certainly isn’t the case. That wasn’t Mark’s point, either. His point was that BAM will leverage CEP and EDA. This, I completely agree with.

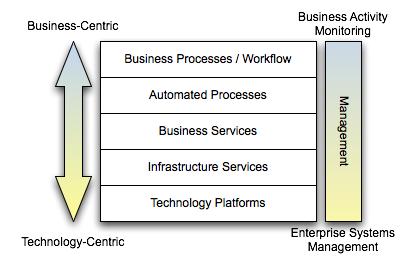

You can take a view on our solutions like the one in the diagram. At higher levels, the concepts we’re dealing with are more business-centric. At lower levels, the concepts are more technology-centric. Another way of looking at it is that at the higher levels, the products involved would be specific to the line of business/vertical we’re dealing with. At the lower levels, the products involved would be more generic, applicable to nearly any vertical. For example, an insurance provider may have things like quoting and underwriting at the top, but at the bottom, we’d have servers, switches, etc. Clearly, the use of servers is not specific to the insurance industry.

All of these platforms require some form of management and monitoring. At the lowest levels of the diagram, we’re interested in traditional Enterprise Systems Management (ESM). The systems would be getting data on CPU load, memory usage, etc. and technologies like SNMP would be involved. One could certainly argue that these ESM tools are very event-driven. The collection of metrics and alerts is nearly always done asynchronously. If we move up the stack, we get to business activity monitoring. The interesting thing is that the fundamental architecture of what is needed does not change. Really, the only thing that changes is the semantics of the information that needs to get pushed out. Rather than pushing CPU load, I may be pushing out the number of auto insurance quotes requested and processed. This is where Mark is right on the button. If the underlying systems are pushing out events, whether at a technical level or at a business level, there’s no reason why CEP can’t be applied to that stream to deliver back valuable information to the enterprise, or even better, coming full circle and invoking some automated process to take action.
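One way to picture the claim that only the semantics change as you move up the stack: the same event shape and the same rule loop can carry a CPU reading or a count of insurance quotes. The `MetricEvent`, `Rule`, and `process` names, the metric names, and the limits below are all made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class MetricEvent:
    name: str    # e.g. "cpu.load" or "quotes.requested" (hypothetical names)
    value: float
    level: str   # "technical" or "business"


@dataclass
class Rule:
    metric: str
    limit: float
    action: Callable[[MetricEvent], None]  # the automated process to invoke


def process(events: Iterable[MetricEvent], rules: List[Rule]) -> int:
    """Apply every rule to every event; return how many actions fired."""
    fired = 0
    for event in events:
        for rule in rules:
            if event.name == rule.metric and event.value > rule.limit:
                rule.action(event)
                fired += 1
    return fired
```

Nothing in `process` knows whether an event is technical or business-level; that distinction lives entirely in the data and in which rules are registered.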

I think that the most important takeaway on this is that you have to be thinking from an architectural standpoint as you build these things out. This isn’t about running out and buying a BAM tool, a BPM tool, a CEP tool, or anything else. What metrics are important? How will the metrics be collected? How do you want to perform analytics (is static analysis against a centralized store enough, or do you need dynamic analysis in realtime driven by changing business rules)? What do you want to do with the results of that analysis? Establishing a management architecture will help you make the right decisions on what products you need to support it.

EDA begins with events

Joe McKendrick asks, “Is EDA the ‘new’ SOA?” First, I’ll agree with Brenda Michelson that EDA is an architecture that can effectively work in conjunction with SOA. While others out there view EDA as part of SOA, I think a better way of viewing it would be that services and events must both be part of your technology architecture.

The point I really want to make, however, which expounds on my previous post, is that I simply think event-oriented thinking is the exception, rather than the norm, for most businesses. I’m not speaking about events in the technical sense, but rather, in the business sense. What businesses are truly event driven, requiring rapid response to change? Certainly, the airlines are, as evidenced by JetBlue’s recent difficulties. There are some financial trading sectors that must operate in real-time, as well. What about your average retail-focused company, however? Retail thinking seems to be all about service-based thinking. While you may do some cold calls, largely, sales happen when someone walks into the store, goes to the website, or calls on the phone. It’s a service-based approach. They ask, you sell. What are the events that should be monitored that would trigger a change in the business? For companies that are not inherently event-driven, the appropriate use of events is for collecting information and spotting trends. Online shopping can be far more informative for a company than brick-and-mortar shopping because you’ve got the clickstream trail. Even if I don’t buy something, the company knows what I entered in the search box, and what products I looked at. If I walk into Home Depot and don’t purchase anything, is there any record of why I came into the store that day?

Again, how do we begin to go down the direction of EDA? Let’s look at an event-driven system. The October 2006 issue of Business 2.0 had a feature on Steve Sanghi, CEO of Microchip Technology. The article describes how he turned around Microchip by focusing on commodity processors. As an example, the article states that Intel’s automotive-chip division was pushing for “a single microprocessor near the engine block to control the vehicle’s subsystems and accessories.” Microchip’s approach was “to sprinkle simpler, cheaper, lower-power chips throughout the vehicle.” Guess what, today’s cars have about 30 micro-controllers.

So, what this says is that the appropriate event-based architecture is to have many smaller points of control that can emit information about the overall system. This is the way that many systems management products work today; think SNMP. To be appropriate for the business, however, this approach needs to be generating events at the business level. Look at the applications in your enterprise’s portfolio and see how many of them actually publish any sort of data on how they are being used, even if it’s not in real time. We need to begin instrumenting our systems and exposing this information for other purposes. Most applications are like the checkout counter at Home Depot. If I buy something, it records it. If I don’t buy something and just exit the store, what valuable information has been missed that could improve things the next time I visit?
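The Home Depot example can be turned into a small sketch of an application instrumented to publish business events, including the visit that ends without a sale. The `Storefront` class and the event type names are hypothetical; `emit` stands in for whatever publishing mechanism is actually available.

```python
from typing import Callable, Dict, List

Event = Dict[str, object]


class Storefront:
    """Hypothetical application that publishes business events by default."""

    def __init__(self, emit: Callable[[Event], None]) -> None:
        self._emit = emit
        self._viewed: List[str] = []
        self._bought: List[str] = []

    def search(self, term: str) -> None:
        self._emit({"type": "search.performed", "term": term})

    def view(self, product: str) -> None:
        self._viewed.append(product)
        self._emit({"type": "product.viewed", "product": product})

    def purchase(self, product: str) -> None:
        self._bought.append(product)
        self._emit({"type": "purchase.completed", "product": product})

    def leave(self) -> None:
        # The visit that ends without a sale is still worth recording.
        self._emit({
            "type": "visit.ended",
            "viewed": list(self._viewed),
            "purchased": list(self._bought),
        })
```

A checkout counter only implements `purchase`; the other three methods are where the information that is usually lost gets captured.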

I’d love to see events become more mainstream, and I fully believe it will happen. I certainly won’t dispute that event-driven systems can be more loosely coupled. However, I’ll add that the events we’re talking about in that case are not necessarily the same thing as business events. Many of those events will never be exposed outside of IT, nor should they be. It’s the proper application of business events that will get companies to open their wallets and purchase new infrastructure built around that concept.

Uptake of Complex Event Processing (CEP)

I’m seeing more and more articles about complex event processing (CEP) these days. If you’ve followed my blog, you’ll know that I’m a big fan of events, so I try to read these when they come across my news reader. One of the challenges I see, however, is that event-driven thinking is not necessarily the norm for businesses. Yes, insurance companies may deal with disaster events, and financial services companies may deal with “life” events like weddings, births, and kids going to college, but largely, the view is very service-based. It is reactive in nature. You ask me for something, and I give it to you.

This poses a challenge for event processing to gain mindshare. While event processing is certainly the norm in user interface processing and embedded systems, it’s not in your typical business IT. Ask yourself: if you were to install a CEP system in your enterprise today, what events would it see?

The starting point that I see for events should merely be publication. Forget about doing anything but collecting statistics at the beginning. Since events don’t align with how we’re normally thinking, perhaps we should let them show us how we should be thinking. This gets into the domain of business intelligence. The beauty of events, however, is that they can make the intent explicit, rather than implicit. If I’m only performing analysis based on database changes, am I seeing the right thing, or am I only seeing symptoms of the event? Not all events result in a database change, and that’s where the important correlations may lie. If a company shows up on page one of the Wall Street Journal, it could result in increased trading activity for that company. My databases may record the increased trading, but I may not have a record of the triggering event: the news story.

Humans are very good at inferring relationships between events, sometimes better than we think. But without any events, how can we infer any relationships? We don’t want to overwhelm the network with XML messages that no one ever looks at, but we shouldn’t be at the opposite extreme either. Starting with new applications, I’d make sure that those systems are publishing some key events based upon their functionality. Now we can start doing some analysis and looking for correlations. We then start to encourage event-driven thinking about the business and, as a result, create the potential for CEP systems to be used appropriately.
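
As a rough illustration of what “looking for correlations” could mean once events are being published, the Python sketch below counts how often one kind of event is followed by another for the same subject within a time window, using the news story and trading activity example. The `Event` type, the event names, and the one-hour window in the usage are all hypothetical, and this is simple counting, not a real CEP engine or a statistical test.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    name: str      # hypothetical, e.g. "news.front_page" or "trading.volume_spike"
    subject: str   # what the event is about, e.g. a company
    at: datetime


def count_followed_by(events: Iterable[Event], first: str, then: str,
                      window: timedelta) -> Counter:
    """Per subject, count `first` events followed by a `then` event within `window`."""
    ordered = sorted(events, key=lambda e: e.at)
    hits: Counter = Counter()
    for i, trigger in enumerate(ordered):
        if trigger.name != first:
            continue
        for later in ordered[i + 1:]:
            if later.at - trigger.at > window:
                break  # everything after this is outside the window
            if later.name == then and later.subject == trigger.subject:
                hits[trigger.subject] += 1
                break  # count each trigger at most once
    return hits
```

Calling `count_followed_by(events, "news.front_page", "trading.volume_spike", timedelta(hours=1))` only works if the news story was published as an event in the first place. That is the argument for publishing before analyzing.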

As an example of how far we still have to go, let’s look at Amazon. They certainly leverage business intelligence extremely well. Unfortunately, as far as I can tell, it’s largely based upon tracking the event that impacts their bottom line directly: purchasing. If I were them, I’d be looking at wish list activity much more closely. Interestingly, my wife gets more recommendations on technical books than I do. Why? Because she’s purchasing them as gifts for me. I put them on my wish list, she buys them. Because they’re looking at the wrong event, they infer that she’s interested in them when she isn’t. I am. They need to track the event of me adding a book to my wish list, along with someone purchasing it for me, and then turn around and make recommendations back to me. Of course, that’s part of the challenge, though. There is simply a ton of information that you could collect, and if you collect the wrong stuff, you can waste a lot of time. Start with a small set of information that you know is important to your business and build out from there.
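
The wish list problem can be sketched in a few lines of Python. This is only an illustration of tracking both events together: the event types, the `gift_for` field, and the attribution rule are assumptions of mine, and nothing here reflects how Amazon actually models its data or builds recommendations.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Optional, Set


class WishListAdded(NamedTuple):
    owner: str
    item: str


class Purchased(NamedTuple):
    buyer: str
    item: str
    gift_for: Optional[str] = None  # hypothetical: set when bought from someone's wish list


def interest_profile(wish_events: Iterable[WishListAdded],
                     purchase_events: Iterable[Purchased]) -> Dict[str, Set[str]]:
    """Attribute interest to the wish list owner, not to the gift buyer."""
    wish_events = list(wish_events)
    wished = {(w.owner, w.item) for w in wish_events}
    interests: Dict[str, Set[str]] = defaultdict(set)

    # Adding an item to a wish list is itself a signal of interest.
    for w in wish_events:
        interests[w.owner].add(w.item)

    for p in purchase_events:
        if p.gift_for is not None and (p.gift_for, p.item) in wished:
            # Gift purchase from a wish list: the owner is already credited.
            continue
        interests[p.buyer].add(p.item)

    return dict(interests)
```

With a wish list event for me and a matching gift purchase by my wife, the technical book ends up in my profile and stays out of hers, while anything she buys for herself still counts toward her own.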