Archive for the ‘Management’ Category

Virtualization Podcast

Dana Gardner has posted the latest edition of his Briefings Direct SOA Insights podcast series, which is a discussion on virtualization and IT operations efficiency. Besides myself, we had a big group for this discussion including Jim Kobielus, Neil Macehiter, Dan Kusnetzky, Brad Shimmin, JP Morgenthal, and Tony Baer.

I find both of these topics very interesting and was glad to be part of the discussion. Operational management is an area ripe for improvement in many organizations, and it’s something that doesn’t get a lot of attention, because the impact to the business is largely ignored unless something breaks. Somehow, we need to move from a firefighting mode to a value-add mode where it’s more about the collection of data during normal operations to assist in the continued success of the business.

You can read a full transcript of the discussion here.

Working within the horizontal silo

It’s about 6:20 in the morning, and I’m presently on a 5-hour bus ride with a bunch of IT staff headed toward some facilities of my employer to learn more about their business. Before I get into the topic for this entry, I certainly want to give kudos to my employer for setting up this opportunity for IT to learn more about the business. With the long bus ride, I’m trying to get caught up on some podcasts. I’m presently listening to a discussion on SOA Management with Jason Bloomberg of ZapThink and Dana Gardner of Interarbor Solutions.

In his intro, Dana Gardner used the oft-mentioned and maligned term, silo. For whatever reason, it suddenly occurred to me that we always work within silos. Organizational structures create silos. Projects create silos. Physical locations can create silos. So, perhaps the right discussion shouldn’t be how to eliminate silos, but rather, how to choose silos correctly, work appropriately within them, and know when to redefine them. I’ve commented a bit on how to organize things, specifically in my post on horizontal and vertical thinking. For this entry, I’d like to discuss the appropriate way to operate within a horizontal silo.

A horizontal silo is one where the services being provided from that silo have broad applicability. A very easy example most organizations should be familiar with is servers. While there are multiple products involved based on the type of processing (e.g. big number crunching versus simple transactional web forms), you’d be hard pressed to justify having every project select its own servers. This area isn’t without change; one only needs to look at VMware and its competitors to see this.

In many IT organizations, when a centralized group is established, the focus can often be on cost containment or reduction. For many horizontal domains, this makes a lot of sense, but there’s a risk associated with this. When a group focuses on cost reduction, this is frequently done at the expense of the customer. Cost reduction typically means more standardization and less customer choice. To an extreme, the service team can wind up getting too focused on their own internal processes and expenses and completely forget about the customer. This is neither good nor bad, only something that must be decided by the organization. I can do a lot of my household shopping at either Target or Walmart. I’m probably going to have a better experience at Target; however, it will probably cost more than Walmart. What’s most important to you?

Within the enterprise, I’ve seen this dilemma occur in areas where some specialized technical knowledge is needed, but where the services themselves are not standardized/commoditized. The most frequent example is that of Data Services. Many organizations create a centralized data services group to retain oversight over all SQL written. The problem with this is that while we may have a team that can write great SQL, we may not have a team that really understands what information the business needs from those queries. The team may try to minimize the amount of services available rather than give their customers what they need. What’s the right answer?

When establishing a service team, you need to think about your engagement model. Are you going to provide an outsourcing model, or a consulting model? In an outsourcing model, the service is largely provided without customer input. Customers simply pick from a list of choices and the burden is completely on the service team to provide. Getting a server or a network connection may be much better suited for this category. In a consulting model, there’s a recognition that some amount of input from the customer is still needed to be successful. I can’t create a good data service without knowledge of the customer’s information needs. A consulting model is going to be more expensive than an outsourcing model. If an organization judges the success of this team based on cost, that’s a problem, but it’s a problem with the success criteria, not with the model. First and foremost, we want to be sure that the right solution gets built. When services are provided in a cookie-cutter approach, the emphasis can be on cost. When each service requires customization, the focus needs to shift a little bit more toward providing the right solution, with cost as a secondary concern. It may not be about creating reusable services, but usable services that are less likely to cause problems than a custom-built solution by staff without the proper expertise in a given area.

Service focus or product focus?

Neil Ward-Dutton of Macehiter Ward-Dutton had a post recently entitled “Rethinking IT projects? Think service, not product, focus.” He called out a frequent mantra of mine, which is that we need to get away from the typical project-based mentality and toward a product-based mentality. While Neil agrees that we need to get away from the project-based mentality, he questioned whether a product-based mentality is the right approach either. Instead, he advocates a service-based focus, where “service” in this case is more akin to its use in ITIL and IT Service Management (my interpretation, not his).

If you dig into his post, and if you’ve been following my own posts, I think you’ll find that we’re essentially saying the same thing, but perhaps using different terminology. Neil states:

The trouble is that taking too literal a view of IT delivery through the lens of product management can prevent you from reflecting reality the way that “customers” (regular business people in your organisation, and quite possibly those external customers that ultimately pay all the salaries) see it.

I couldn’t agree more. I’m a huge advocate for usability, user centered design, etc., so anything that involves assumptions on what the user needs rather than going out and actually involving the customer is definitely a red flag in my book. Personally, I don’t even like to use the term customer when talking about IT delivering solutions to the business where the business is the end user. The business and IT should be partners in the effort, not a customer/supplier relationship.

Further in the post, Neil makes the comment:

If you take too much of a product management centric view, the danger is that you focus all your energy creating the right kind of development and deployment capabilities, without thinking of the broader service experience that customers need and expect over the lifecycle of a long-term commitment. IT operations is where the rubber meets the road, and where customer expectations are met or dashed. Too simplistic a focus on product-style management for IT delivery perpetuates the development-operations divide and squanders a great opportunity.

Personally, I don’t view what Neil describes as good product management. If your definition of product management is simply a chained sequence of development activities, you’re missing the boat. Only through excellent operational management and customer interaction can you make appropriate decisions on what those subsequent activities should be. It’s not all about development. I think this point was made very well by Dr. Paul Brown in his book, “Succeeding with SOA: Realizing Business Value Through Total Architecture” which I was fortunate enough to review with Dana Gardner. He made very clear that projects are only successful when they deliver the promised business value. This value doesn’t come when the software is deployed into production. It comes after it. It may be one month, it may be one year. I’ve been involved with a number of projects that defined the project mission statement in terms of “delivering something by such-and-such date for $$$.” While meeting dates and budgets are very important, they don’t define success. Delivering business value defines success, and the effort needs to continue to ensure that happens even if there are no development activities occurring. This brings in Neil’s notion of service, and it is absolutely a critical component to success.

Book Review

I had the opportunity to do a review of a book, and then discuss it in a podcast with the author and Dana Gardner. The book is entitled, “Succeeding with SOA: Realizing Business Value Through Total Architecture” and is written by Dr. Paul Brown of TIBCO Software.

You can view a transcript here, listen to the podcast here, or I’ve also added it as an enclosure on this entry.

I enjoyed this book quite a bit, and have to point out that it’s not your typical technology-focused SOA book. It presents many of the cultural and organizational aspects behind SOA, and does a pretty good job. It tries to offer guidance that works within the typical project-based structures of many IT organizations. While I personally would like to see some of these project-based cultures broken down, this book offers practical advice that can be used today and eventually lead to the cultural changes necessary. Overall, I recommend the book. I found myself thinking, “Boy, if I were writing a book on SOA, these are things that I’d want to cover.” Give the podcast a listen, and check out the book if you’re interested.

Full disclosure: Outside of receiving a copy of the book to review, I did not receive any payment or other compensation for doing the review or participating in the podcast.

Open Group EA 2007: Andres Carvallo

Andres Carvallo is the CIO for Austin Energy. He was just speaking on how the Internet has changed the power industry. He brought up the point that we’ve all experienced, where we must call our local power company to tell them that the power is out. Take this in contrast to the things you can do with package delivery via the Internet, and it shows how the Internet age is changing customer expectations. While he didn’t go into this, my first reaction was that IT is much like the power company. All too often, we only know a system is down because an end user has told us so.

This leads to a discussion of something that is all too frequently overlooked: the management of our solutions. Visibility into what’s going on is all too often an afterthought. If you focus exclusively on outages, you’re missing the point. Yes, we do want to know when the 0.001% of downtime occurs. What’s more important, however, is understanding what’s going on the other 99.999% of the time. It’s better to refer to this as visibility rather than monitoring, because monitoring leads to narrow thinking around outages rather than the broader information set.
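To put those percentages in perspective, here is a small sketch of the arithmetic, assuming a 365-day year (leap years ignored). Five nines leaves only about five minutes of downtime a year, and says nothing about what the service was doing during the remaining 525,000-plus minutes. The function name is purely illustrative.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumes a 365-day year; leap years ignored


def allowed_downtime_minutes(availability_pct: float,
                             period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Minutes of downtime permitted over a period at a given availability percentage."""
    return period_minutes * (100.0 - availability_pct) / 100.0


for pct in (99.9, 99.99, 99.999):
    print(f"{pct}% availability -> {allowed_downtime_minutes(pct):.2f} minutes of downtime per year")
```

The interesting data lives in the other column: everything that happened while the system was up.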

Keeping with the theme of the power industry, Austin Energy clearly needs to deal with the varying demands of the consumers of its product. Those consumers range from the major technology players in the Austin area to the typical residential customer. Certainly, all consumers are not created equal. Think about the management infrastructure that must be in place to understand these different consumers. Do you have the same level of management in your IT solutions to understand the different consumers of your services?
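One way to start answering that question is to tag every service call with the consumer that made it and summarize by consumer. The following is a minimal Python illustration; the `CallRecord` fields, the consumer names, and the choice of metrics (volume, error rate, mean latency) are my own hypothetical assumptions, not a description of any particular management product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class CallRecord:
    consumer: str      # who invoked the service (hypothetical identifiers below)
    latency_ms: float  # observed response time for this call
    succeeded: bool    # whether the call completed without a fault


def summarize_by_consumer(records):
    """Group call records by consumer and report volume, error rate, and mean latency."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record.consumer].append(record)

    summary = {}
    for consumer, calls in grouped.items():
        failures = sum(1 for c in calls if not c.succeeded)
        summary[consumer] = {
            "calls": len(calls),
            "error_rate": failures / len(calls),
            "avg_latency_ms": sum(c.latency_ms for c in calls) / len(calls),
        }
    return summary


records = [
    CallRecord("order-portal", 120.0, True),
    CallRecord("order-portal", 180.0, True),
    CallRecord("batch-billing", 900.0, False),
    CallRecord("batch-billing", 700.0, True),
]
for consumer, stats in summarize_by_consumer(records).items():
    print(consumer, stats)
```

The point is less the metrics themselves than the fact that the consumer is captured on every call, so the data can be sliced by consumer at all.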

This is a very interesting discussion, especially given today’s context of HP’s acquisition of Opsware (InfoWorld report, commentary/analysis from Dana Gardner and Tony Baer).

Another great Technometria

This time the conversation is with Scott Berkun, author of “The Myths of Innovation.” To give you an idea of how entertaining this Technometria conversation was, Phil Windley’s two co-hosts, Ben Galbraith and Scott Lemon, both went online to Amazon during the call and purchased Scott’s book. The discussion focused on the human element of software development and things that contribute to success with innovation. Here’s the link to the IT Conversations page for it.

More from the Technology Garden

I previously posted some thoughts on the new book from the Neils at Macehiter Ward-Dutton along with Jon Collins and Dale Vile from Freeform Dynamics called “The Technology Garden: Cultivating Sustainable IT-Business Alignment.” I finally got a chance to pick it up again, and I thoroughly recommend chapter 8, “Foster relationship with key IT suppliers” for anyone who has an involvement in vendor decisions. Working with vendors is an art, and it’s not just the department head who needs to do it. They give some practical guidance on how to determine who your strategic vendors are, and who the vendors are that you simply deal with on a transaction by transaction basis. In addition to this, they also give prescriptive guidance on working with them, and the art of the win-win solution.

In addition to being of value to vendor relations, anyone adopting SOA should also understand this chapter. Why? Because if you’re a service provider, a lot of the same conflicting pressures occur between a consumer and a provider. Strive for the win-win situation, and you’ll be better off. It’s not easy to do, especially when you have multiple consumers each with their own priorities.

Will your culture allow an impact player?

Jordan Haberfield of Excel Partner recently posted a blog titled “Enterprise Architects as ‘Impact Players'”. I’ve enjoyed Jordan’s blog as it discusses EA from a different perspective, one of talent and corporate culture, rather than the technical aspects. I’ve always found the human and social aspects of things very interesting.

Anyway, he provides an excerpt from a book he read called “Don’t Send a Resume: And Other Contrarian Rules To Help Land A Great Job” by Jeffrey Fox which introduces the role of the impact player. Here’s the quote:

“There is always a job in a good organization for an impact player. An impact player is someone who can quickly improve the economics of a company. An impact player is someone who can bring in customers, energize the sales force, restructure an under-performing department, speed up the innovation process, solve the late shipment problem, or physically move the manufacturing facility to a lower cost area. An impact player also is someone who will do the necessary but noxious tasks no one else wants to do. An impact player is someone who will get their hands dirty, pick up a shovel and start shoveling, open the store early and close late, deliver product on their way home, deal tirelessly with irate customers and make a service call on Christmas Eve. Good executives in good companies want to hire impact players.”

Jordan goes on to state that “Enterprise Architects are in a position to become that impact player and make a significant difference.” It’s probably better stated that Enterprise Architects should be in a position to do this. Whether they are or not is highly dependent on the corporate culture.

I’ve spoken with a number of colleagues in the St. Louis area, and it’s my understanding that many of the organizations here where I reside don’t even have a formal EA group. Why is this important? It’s important because I believe that an impact player often has to transcend boundaries and operate outside of the constraints that a particular job description may imply. If an organization doesn’t have an Enterprise Architecture discipline, someone needs to go outside of the box of their current job description and start doing EA. If the corporate culture is resistant to people operating outside of their formal job description, that impact player is going to need some very thick skin.

A great example is from two years ago when Jason and Ron at ZapThink emphasized the need for the SOA Champion to guide an organization through SOA adoption. SOA is not about buying an ESB, Registry/Repository, or any other tool. For the bulk of companies that comprise the “status quo” of Service Averse Architecture, it’s about a fundamental change to the way IT solutions are delivered which can cover organizational change, process change, and technology change. What organization has a formal role established for this position? Probably not many. It requires someone to be an impact player, think outside the box, transcend boundaries, and pave the path for the new way of doing things.

Some companies encourage individuals to think outside of the box and outside of their formally stated responsibilities, and that encouragement should probably be added to the litmus test for a company likely to be successful with innovation and strategic initiatives. After all, the degree to which a company does this is a matter of trust. Unfortunately, it’s far easier to break down that trust than it is to build it up. For those of you dealing with that, follow this link to another excellent book that I’ve read.

In summary, the original quote by Dr. Fox states that “There is always a job in a good organization for an impact player.” The key part of this is “good organization.” If you are exhibiting the qualities of an impact player, but you’re struggling to be successful, take a look at the organization’s culture and see whether it is a “good organization” or not. If you’re looking for a good organization, you may need to look for the foot-in-the-door opportunity, because often times, it takes an outsider to come in and recognize the areas for potential impact, so you’re not going to find it on Monster or Dice.

ATOM/LDAP Mashup

James Governor of RedMonk has a post that he claims is the “Most exciting idea in ages: an ATOM/LDAP mashup.” It’s actually a very interesting idea to me, especially because he suggests applying it to the management domain. I’ve previously posted (here and here) about the convergence of metadata, and how I’ve seen parallels between Service Repository, Configuration Management databases, Asset Management systems, and potentially even LDAP. So, if we’re doing an ATOM/LDAP mashup, is ATOM equally applicable to other items in the metadata management domain? I suspect that it is. Nearly all management specs I’ve seen have a resource-oriented view, and it would seem that the combination of ATOM and REST could be a very good fit on top of this. Hopefully James will keep us all updated on the progress of this exciting idea.
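To make the idea a little more concrete, here is a rough sketch of what publishing metadata entries (a service from a repository, a server from a configuration management database) as an Atom feed might look like, using Python’s standard `xml.etree.ElementTree`. Mapping each metadata attribute to an Atom `category`, the `urn:example:` identifiers, the publisher name, and the sample resources are all hypothetical choices of mine, not part of James’s proposal or any management specification.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"
ET.register_namespace("", ATOM_NS)  # serialize Atom as the default namespace


def q(tag):
    """Qualify a tag name with the Atom namespace, ElementTree-style."""
    return f"{{{ATOM_NS}}}{tag}"


def metadata_feed(feed_id, title, updated, resources):
    """Build an Atom feed in which each entry describes one managed resource."""
    feed = ET.Element(q("feed"))
    ET.SubElement(feed, q("id")).text = feed_id
    ET.SubElement(feed, q("title")).text = title
    ET.SubElement(feed, q("updated")).text = updated
    author = ET.SubElement(feed, q("author"))
    ET.SubElement(author, q("name")).text = "metadata-publisher"  # hypothetical publisher
    for res in resources:
        entry = ET.SubElement(feed, q("entry"))
        ET.SubElement(entry, q("id")).text = res["id"]
        ET.SubElement(entry, q("title")).text = res["name"]
        ET.SubElement(entry, q("updated")).text = res["updated"]
        # Illustrative choice: each metadata attribute becomes an Atom category.
        for key, value in res["attributes"].items():
            ET.SubElement(entry, q("category"), term=value,
                          scheme=f"urn:example:attr:{key}")
        ET.SubElement(entry, q("content"), type="text").text = res["description"]
    return ET.tostring(feed, encoding="unicode")


resources = [
    {"id": "urn:example:service:customer", "name": "CustomerService",
     "updated": "2007-06-01T12:00:00Z", "description": "Customer lookup service",
     "attributes": {"owner": "crm-team", "version": "2.1"}},
    {"id": "urn:example:server:app01", "name": "app01",
     "updated": "2007-06-02T08:30:00Z", "description": "Application server",
     "attributes": {"owner": "infrastructure", "location": "dc-east"}},
]
print(metadata_feed("urn:example:feed:metadata", "Managed resources",
                    "2007-06-02T08:30:00Z", resources))
```

Because every entry has a stable `id` and an `updated` timestamp, a consumer could poll the feed (or use plain HTTP GETs on each entry in a REST style) to learn what changed, which is the part that seems most appealing for the metadata management domain.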

SOA requires trust

James McGovern made the following comments regarding my Service Adverse Architecture post:

Todd Biske is right on the money by echoing the fact that companies who have mastery of SOA also have forward thinking management. I wonder if him and Joe McKendrick would define a litmus test so that others can characterize their own enterprise in terms of the management team?

While I’ll have to give some thought to the entire litmus test, the first thing that immediately came to mind was trust, and it’s not limited to management. If I’m a project manager, I want to have as much control over my own destiny as possible. When I now become dependent on other groups and other projects outside of my control and my management to be successful, that’s a big leap of faith. Unfortunately, it’s part of human nature to remember the one project where a team had problems rather than the times the team was successful. As a result, parties may go into the relationship expecting that someone is going to screw up, spend all their time looking for it, ready to point blame, and that’s exactly what happens. If the groups instead focused on what is necessary to be successful, they’d probably be successful.

We live in a culture where making a mistake is unacceptable. One only needs to look at the current political process to understand how much our culture is based on avoiding failure rather than achieving success. A legislator is not allowed to change a stance on any issue, even though we as individuals do it all the time because we learn more as we go along. Cultures that are based on avoiding failure are, in general, going to have a difficult time doing more than maintaining the status quo. Innovating and advancing means taking risks, and when you take risks, some of them don’t pan out. Teams should not be penalized when those risks don’t pan out, unless it’s clear that they made very bad decisions based upon the information available at that time. I can look back at many decisions I’ve made in the past and recognize that some of my assumptions didn’t come true. As long as I’ve learned from that and don’t repeat those same decisions, there should be no penalty associated with it.

I suspect that organizations that are more forward-thinking have a greater level of trust within the organization. There is more collaboration than competition, and people all understand that everyone in the organization has the best interests of the organization as a whole in mind, not the best interests of themselves, their team, or their manager. Furthermore, management works effectively with the individuals in the trenches to help them understand what levels of risk the organization will tolerate and what the boundaries for innovation are within each group in the organization (essentially around roles and responsibilities). I’d much rather have an application developer creating innovative applications than trying to leverage a new Java framework. At the same time, the team responsible for development frameworks needs to be open to receiving input from the application developer. If they have mutual trust, the development framework team will be open to hearing about new alternatives, and the application developer will not throw a fit if the development framework team decides that it’s not in the best interests of the company to leverage the new framework at this time.

SOA will create more moving parts associated with the delivery of IT solutions. More moving parts means more ownership and hence, more interaction among teams. If we don’t trust each other, the chances of success are greatly reduced. That being said, trust must be earned and maintained; it cannot be established by edict. Service providers must do all they can to build trust. Management must ensure that the organization takes an “innocent until proven guilty” approach, rather than the opposite, with actions that back it up.

The Pace of Change in the IT/Business Relationship

I’m currently reading The Technology Garden: Cultivating Sustainable IT-Business Alignment by the Neils from Macehiter Ward-Dutton along with Jon Collins and Dale Vile of Freeform Dynamics. In the spirit of full disclosure, the publicist sent me a free advance copy of the book, as Amazon reports its availability as June 11th. I’m about halfway through it, and it’s been a pleasant read. Chapters four and five have particularly caught my attention. They are titled, “Create a common language” and “Establish a peer relationship between business and IT.” The common language chapter puts the onus on IT to learn the language of the business. While they also state that no competent business executive should be technology-ignorant (my words, not theirs), the bulk of the burden is on the technology staff. The next chapter begins with a discussion of how many IT groups play a supplier role, and how that simply isn’t good enough these days. While service delivery and management is very important for building trust, it’s not sufficient. They state:

Suppliers, by definition, do what they’re told. The customer is always right! The parameters of service delivery are defined by the ‘customer,’ and thereafter the supplier delivers, in response to requests, in the context of those parameters (you can think of these as ‘contracts’ and ‘service-level agreements’).

When I read this, it occurred to me that as an industry, we really haven’t made much progress on the whole concept of IT and business as peers. I’ve mentioned previously that in my early days, I did a lot of work on user interface technologies, and had a strong interest in human-computer interaction during college. My first introduction to user-centered design techniques and viewing the end user as a partner in the process was in the summer of 1993. That was 14 years ago, and yet I’d have to say that in general, IT still operates in a supplier role, with things thrown back and forth over the business/IT wall. I really liked the emphasis on communication in Chapter 4 of the book. If the continued prevalence of IT as a supplier mentality is due to a fundamental lack of trust, the only thing that will eventually break it down is communication. I’ve certainly been guilty of falling into the typical technologist mode of communicating: email. As they call out, it’s time to start getting out of our chairs, out of our comfort zones, and start communicating. If you don’t know who to begin your conversations with, seek out someone who can help you with that.

I had the experience of participating in an exercise directed by a CIO where we were split into groups of 8 people, and then further subdivided into two groups of 4 seated at separate tables. Each table had a task to accomplish as outlined on a piece of paper. All tasks were identical, and each set of 8 people had identical equipment at their table to complete the task. The goal of the task was to ensure that each sub-group of 4 built identical solutions. If you’ve seen Apollo 13, this whole thing was prefaced by a video clip from the movie where the engineers at Mission Control dump a box full of stuff on the table and have to figure out a way to build a carbon dioxide scrubber out of it. They then have to get the Apollo 13 astronauts to do the same. The interesting thing about this task was that the instructions were not very limiting. For example, there was nothing that said one group of four couldn’t get up and go sit with the other four and complete the whole thing together. The point of the exercise was that we set many artificial boundaries in our work based on past experiences, culture, etc. In fact, there are probably more artificial boundaries than real boundaries. Does your company have a stated policy that you can’t go and talk to an end user on the business side? If it doesn’t, there’s nothing preventing you from doing that. If we’re going to begin building trust back up in the IT/Business relationship, it is time to step outside of the box and start communicating as peers. Let’s not wait another 14 years to start making a change.

Great discussion on non-functional aspects of SOA

Ron Jacobs had a great discussion with Mark Baciak on ARCast over the course of two podcasts (here and here). In the second one, they discuss Mark’s Alchemy framework. It is essentially an interceptor-based architecture coupled with a centralized store and analytic engine. In my “Converging in the middle” post, I talked about the need for mediation capabilities that address the non-functional aspects of service interactions. Now, most people may see the list and think that an ESB or an XML/Web Service Gateway is the way to go about it. In these podcasts, Mark goes over their system which provides these capabilities, yet through a “smart node” approach. That is, the execution container of both the consumer and the provider is augmented with interceptors that can now act as an intermediary, just as a gateway in the network can.
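For readers who haven’t seen the smart node approach, here is a minimal sketch of the interceptor idea in Python: a decorator wraps a service operation inside its own execution container and records each call to a central store. This is not Alchemy’s API; the names `intercepted`, `CENTRAL_STORE`, and `get_customer` are hypothetical, and the in-memory list stands in for whatever centralized store and analytic engine a real system would use.

```python
import time
from functools import wraps

# Stand-in for the centralized store; a real system would ship events elsewhere.
CENTRAL_STORE = []


def intercepted(service_name, store=CENTRAL_STORE):
    """Wrap a service operation so every call is observed in-process (the 'smart node')."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            outcome = "success"
            try:
                return func(*args, **kwargs)
            except Exception:
                outcome = "fault"
                raise  # the interceptor observes faults but does not swallow them
            finally:
                store.append({
                    "service": service_name,
                    "operation": func.__name__,
                    "elapsed_s": time.perf_counter() - start,
                    "outcome": outcome,
                })
        return wrapper
    return decorator


@intercepted("CustomerService")
def get_customer(customer_id):
    """Hypothetical service operation used only to exercise the interceptor."""
    if customer_id < 0:
        raise ValueError("invalid id")
    return {"id": customer_id, "name": "Example"}


get_customer(42)
try:
    get_customer(-1)
except ValueError:
    pass  # the fault is still recorded by the interceptor

for event in CENTRAL_STORE:
    print(event["service"], event["operation"], event["outcome"])
```

A gateway in the network would capture the same events from outside the process; the trade-off is where the policy lives and who has to touch it when it changes.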

The smart network versus smart node discussion falls into the category of religious debates. Truth be told, both ways (and the third way, which is a hybrid between the two) can be successful. There is no right or wrong way in the general sense. What you need to do is understand which effort is most likely to produce the operational model you desire. A smart node approach tends to (but doesn’t have to) favor developers. A smart network model tends to (but doesn’t have to) favor operations. As a case in point, I’ve seen a smart network approach that would not be feasible without developer activity for every change, and I’ve seen a smart node approach that was very policy-driven and operations-friendly. So, as an alternate view on the whole problem space, I encourage you to listen to the podcasts.

SOA and GCM (Governance and Compliance)

I just listened to the latest Briefings Direct: SOA Insights podcast from Dana Gardner and friends. In this edition, the bulk of the time was spent discussing the relationship between SOA Governance and tools in the Governance and Compliance market (GCM).

I found this discussion very interesting, even if they didn’t make too many connections to the products classifying themselves as “SOA Governance” solutions. That’s not surprising though, because there’s no doubt that the marketers jumped all over the term governance in an effort to increase sales. Truth be told, there is a long, long way to go in connecting the two sets of technologies.

I’m not all that familiar with the GCM space, but the discussion did help to educate me. The GCM space is focused on corporate governance, clearly targeting the Sarbanes-Oxley space. There is no doubt that many, many dollars are spent within organizations in staying compliant with local, state, and federal (or your area’s equivalent) regulations. Executives are required to sign off that appropriate controls are in place. I’ve had experience in the financial services industry, and there’s no shortage of regulations that deal with handling investors’ assets, and no shortage of lawsuits when someone feels that their investment intent has not been followed. Corporate governance doesn’t end there, however. In addition to the external regulations, there are also the internal principles of the organization that govern how the company utilizes its resources. Controls must be put in place to provide documented assurances that resources are being used in the way they were intended. This frequently takes the form of someone reviewing some report or request for approval and signing their name on the dotted line. For these scenarios, there’s a natural relationship between analytics, business intelligence, and data warehouse products, and the GCM space appears to have ties to this area.

So where does SOA governance fit into this space? Clearly, the tools that are claiming to be players in the governance space don’t have strong ties to corporate governance. While automated checking of a WSDL file for WS-I adherence is a good thing, I don’t think it’s something that will need to show up in a SOX report anytime soon. Don’t get me wrong: I’m a fan of what these tools can offer, but be cautious in thinking that the governance they claim has strong ties to your corporate governance. Even if we look at the financial aspect of projects, the tools still have a long way to go. Where do most organizations get the financial information? Probably from their project management and time accounting system. Is there integration between these tools, your source code management system, and your registry/repository? I know that BEA AquaLogic Enterprise Repository (Flashline) had the ability to track asset development costs and asset integration costs to provide an ROI for individual assets, but where do these cost numbers come from? Are they manually entered, or are they pulled directly from the systems of record?

Ultimately, the relationship between SOA Governance and Corporate Governance will come down to data. In a couple of recent posts, I discussed the challenges that organizations may face with the metadata associated with SOA, as well as the management continuum. This is where these two worlds come together. I mentioned earlier that a lot of corporate governance is associated with the right people reviewing and signing off on reports. A challenge with systems of the past is their monolithic nature. Are we able to collect the right data from these systems to properly maintain appropriate controls? Clearly, SOA should break down these monoliths and increase the visibility into the technology component of the business processes. The management architecture must allow metrics and other metadata to be collected, analyzed, and reported so that the controllers can make better decisions.

I have one final comment that I don’t want to get lost. Neil Macehiter brought up Identity Management a couple of times in the discussion, and I want to do my part to ensure it isn’t forgotten. I’ve mentioned “signoff” a couple of times in this entry. Obviously, signoff requires identity. Where compliance checks are supported by a service-enabled GCM product, having identity on those service calls is critical. One of the things the controller needs to see is who did what. If I’m relying on metadata from my IT infrastructure to provide this information, I need to ensure that the appropriate identity stays with those activities. While there’s no shortage of rants against WS-*, we will clearly need a transport-independent way of sharing identity as it flows through the various technology components of tomorrow’s solutions.
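
To make the “who did what” requirement concrete, here is a minimal sketch, assuming a hypothetical `Envelope` structure in which the initiating principal is part of the message itself rather than an HTTP header or JMS property. The names `Envelope`, `call_service`, `who_did_what`, and the sample principal and actions are all made up for illustration; this is not WS-Security or any product’s API, just the shape of the idea.

```python
from dataclasses import dataclass, field, replace
from typing import Dict, List


@dataclass(frozen=True)
class Envelope:
    """Hypothetical transport-neutral message envelope: the identity travels
    with the activity itself rather than in HTTP headers or JMS properties."""
    principal: str                      # who initiated the activity
    action: str                         # what was done at this step
    payload: Dict[str, object] = field(default_factory=dict)


# Stand-in for the management infrastructure that records activity metadata.
audit_log: List[Envelope] = []


def call_service(env: Envelope, action: str, **payload: object) -> Envelope:
    """Invoke a (hypothetical) downstream service, carrying the caller's
    identity forward unchanged and recording the step."""
    downstream = replace(env, action=action, payload=dict(payload))
    audit_log.append(downstream)
    return downstream


def who_did_what(log: List[Envelope]) -> Dict[str, List[str]]:
    """The controller's view: actions grouped by the identity behind them."""
    report: Dict[str, List[str]] = {}
    for entry in log:
        report.setdefault(entry.principal, []).append(entry.action)
    return report


request = Envelope(principal="jsmith", action="submit_expense", payload={"amount": 1200})
audit_log.append(request)
routed = call_service(request, "route_for_approval", amount=1200)
call_service(routed, "post_to_ledger", amount=1200)
print(who_did_what(audit_log))
```

Because each downstream envelope is derived from the upstream one, the identity cannot silently drop out between hops, whichever transport actually moves the message.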

The vendor carousel continues to spin…

It’s not an acquisition this time, but a rebranding/reselling agreement between BEA and AmberPoint. I was head down in my work and hadn’t seen this announcement until Google Alerts kindly informed me of a new link from James Urquhart, a blogger on Service Level Automation whose writings I follow. He asked what I think of this announcement, so I thought I’d oblige.

I’ve never been an industry analyst in the formal sense, so I don’t get invited to briefings, receive press releases, or benefit from whatever other mechanisms (if there are any) analysts normally use. I am best thought of as an industry observer, offering my own opinions based on my experience. I have some experience with both BEA and AmberPoint, so I do have some opinions on this. 🙂

Clearly, BEA must have customers asking about SOA management solutions. BEA doesn’t have an enterprise management solution like those of HP or IBM. Even if we consider only BEA’s own products, I don’t know whether they have a unified management solution across all of them. So, AmberPoint technology definitely has the potential to benefit the BEA platform, and customers must be asking about it. If this is the case, you may be wondering why BEA didn’t just acquire AmberPoint. First, AmberPoint has always had a strong relationship with Microsoft. I have no idea how much of their sales this relationship accounts for, but an outright acquisition by BEA could clearly jeopardize that channel. Second, as I mentioned, BEA doesn’t have an enterprise management offering of which I’m aware. AmberPoint can be considered a niche management solution. It provides excellent service/SOA management, but it’s not going to allow you to also manage your physical servers and network infrastructure. So, an acquisition wouldn’t make sense for either side. BEA wouldn’t gain entry into the enterprise management market, and AmberPoint would risk losing customers as its message got diluted by the rest of the BEA offerings.

As a case in point, you don’t see a lot of press about Oracle Web Services Manager these days. Oracle acquired this technology when they acquired Oblix (who acquired it when they acquired Confluent). I don’t consider Oracle a player in enterprise systems management, and as a result, I don’t think people think of Oracle when they’re thinking about Web Services Management. They’re probably more likely to think of the big boys (HP, IBM, CA) and the specialty players (AmberPoint, Progress Actional).

So, who’s getting the best out of this deal? Personally, I think this is a great win for AmberPoint. It extends a sales channel for them and is consistent with the approach they’ve taken in the past. Reselling agreements can strengthen smaller companies, as they reinforce a perception of the smaller company as a market leader, a source of great technology, or both. On the BEA side, it does allow them to offer one-stop solutions directly in response to SOA-related RFPs, and I presume that BEA hopes it will result in more services work. BEA’s governance solution is certainly not going to work out of the box, since it consists of two rebranded products (AmberPoint and HP/Mercury/Systinet) and one recently acquired product (Flashline). All of that would need to be integrated with their core execution platform. It will help BEA with existing customers who desire an SOA management solution but don’t want to deal with another vendor, but BEA has to ensure that there are real integration benefits beyond just the BEA brand.

The management continuum

Mark Palmer of Apama continued his series of posts on myths in the EDA/CEP space with number 3: BAM and BPM are Converging. Mark hit on a subject that I’ve discussed with clients; however, I don’t believe that I’ve ever posted on it.

Mark’s premise is that it’s not BAM and BPM that are converging, it’s BAM and EDA. Converging probably isn’t the right word here, as it implies that the two will become one, which certainly isn’t the case. That wasn’t Mark’s point, either. His point was that BAM will leverage CEP and EDA. This, I completely agree with.

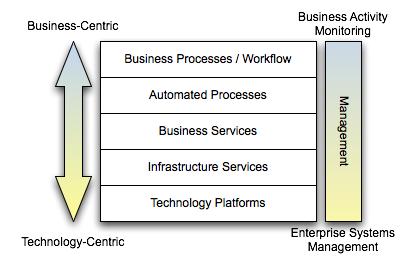

You can take a layered view of our solutions, like the one shown above. At higher levels, the concepts we’re dealing with are more business-centric. At lower levels, the concepts are more technology-centric. Another way of looking at it is that at the higher levels, the products involved would be specific to the line of business/vertical we’re dealing with. At the lower levels, the products involved would be more generic, applicable to nearly any vertical. For example, an insurance provider may have things like quoting and underwriting at the top, but at the bottom, we’d have servers, switches, etc. Clearly, the use of servers is not specific to the insurance industry.

All of these platforms require some form of management and monitoring. At the lowest levels of the diagram, we’re interested in traditional Enterprise Systems Management (ESM). The systems would be getting data on CPU load, memory usage, etc., and technologies like SNMP would be involved. One could certainly argue that these ESM tools are very event-driven. The collection of metrics and alerts is nearly always done asynchronously. If we move up the stack, we get to business activity monitoring. The interesting thing is that the fundamental architecture of what is needed does not change. Really, the only thing that changes is the semantics of the information that needs to get pushed out. Rather than pushing CPU load, I may be pushing out the number of auto insurance quotes requested and processed. This is where Mark is right on the button. If the underlying systems are pushing out events, whether at a technical level or at a business level, there’s no reason why CEP can’t be applied to that stream to deliver valuable information back to the enterprise, or even better, to come full circle and invoke some automated process to take action.
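
The point that only the semantics change can be sketched in a few lines. This is a minimal illustration, not Apama’s or any CEP product’s API: a hypothetical `WindowRule` aggregates one event type over a sliding time window and fires an action when a condition holds. The event names, thresholds, and 60-second windows are assumptions chosen for the example; the same class handles a technical metric (average CPU load) and a business metric (count of auto quote requests).

```python
from collections import deque
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Deque, List, Tuple


@dataclass(frozen=True)
class Event:
    name: str         # semantics: "cpu_load" (technical) or "auto_quote_requested" (business)
    value: float      # a load fraction, or 1.0 per quote request
    timestamp: float  # seconds


class WindowRule:
    """A minimal CEP-style rule: aggregate one event type over a sliding
    time window and fire an action when a condition holds."""

    def __init__(self, event_name: str, window_s: float,
                 aggregate: Callable[[List[float]], float],
                 condition: Callable[[float], bool],
                 action: Callable[[str, float, float], None]) -> None:
        self.event_name = event_name
        self.window_s = window_s
        self.aggregate = aggregate
        self.condition = condition
        self.action = action
        self._window: Deque[Event] = deque()

    def on_event(self, e: Event) -> None:
        if e.name != self.event_name:
            return
        self._window.append(e)
        # Evict events older than the window; the newest event always stays.
        while e.timestamp - self._window[0].timestamp > self.window_s:
            self._window.popleft()
        result = self.aggregate([x.value for x in self._window])
        if self.condition(result):
            # In a real system this might open a ticket or start an automated process.
            self.action(self.event_name, result, e.timestamp)


alerts: List[Tuple[str, float, float]] = []


def record(name: str, result: float, ts: float) -> None:
    alerts.append((name, result, ts))


# Identical machinery; only the semantics of the events and rules differ.
rules = [
    WindowRule("cpu_load", 60, mean, lambda avg: avg > 0.9, record),
    WindowRule("auto_quote_requested", 60, len, lambda n: n >= 3, record),
]

stream = [
    Event("cpu_load", 0.85, 0),
    Event("auto_quote_requested", 1.0, 5),
    Event("auto_quote_requested", 1.0, 20),
    Event("cpu_load", 0.97, 30),
    Event("auto_quote_requested", 1.0, 40),
    Event("cpu_load", 0.99, 90),
]

for event in stream:
    for rule in rules:
        rule.on_event(event)

for name, result, ts in alerts:
    print(f"t={ts:g}s {name}: {result:.2f}")
```

Here `record` just collects alerts; swapping it for a function that kicks off a remediation or business process is what closes the loop described above.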

I think the most important takeaway here is that you have to think from an architectural standpoint as you build these things out. This isn’t about running out and buying a BAM tool, a BPM tool, a CEP tool, or anything else. What metrics are important? How will the metrics be collected? How do you want to perform analytics (is static analysis against a centralized store enough, or do you need dynamic analysis in real time driven by changing business rules)? What do you want to do with the results of that analysis? Establishing a management architecture will help you make the right decisions on what products you need to support it.
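
One way to keep the questions ahead of the product choices is to record the answers as data first. The sketch below is purely illustrative: `MetricSpec`, `Analysis`, and `capabilities_needed` are hypothetical names, and the mapping from answers to capabilities (static analysis implies a central store, real-time analysis implies event stream processing, automated responses imply a process hook) is an assumption of this example rather than a prescribed method.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Set


class Analysis(Enum):
    STATIC = "static analysis against a centralized store"
    DYNAMIC = "dynamic analysis in real time"


@dataclass(frozen=True)
class MetricSpec:
    name: str                 # what metric is important
    collected_by: str         # how it is collected, e.g. "SNMP" or "service event"
    analysis: Analysis        # how it will be analyzed
    automated_response: bool  # whether results should trigger an automated process


def capabilities_needed(specs: List[MetricSpec]) -> Set[str]:
    """Derive required capabilities (not products) from the architecture
    decisions. The mapping is an illustrative assumption."""
    needs: Set[str] = set()
    for spec in specs:
        needs.add(f"collector: {spec.collected_by}")
        if spec.analysis is Analysis.STATIC:
            needs.add("central metrics store and reporting")
        else:
            needs.add("event stream processing (CEP)")
        if spec.automated_response:
            needs.add("process automation hook")
    return needs


specs = [
    MetricSpec("server CPU load", "SNMP", Analysis.DYNAMIC, True),
    MetricSpec("auto quotes processed per day", "service event", Analysis.STATIC, False),
]
for capability in sorted(capabilities_needed(specs)):
    print(capability)
```

Only after a list like this exists does it make sense to ask which BAM, BPM, or CEP products cover it.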