Archive for the ‘Infrastructure’ Category

Most popular posts to date

It’s funny how these syndicated feeds can be just like syndicated TV. I’ve decided to leverage Google Analytics and create a post with links to the most popular entries since January 2006. My blog isn’t really a diary of activities, but a collection of opinions and advice that hopefully remain relevant. While the occasional Google search will lead you to some of these items, many of them have long since dropped off the normal RSS feed. So, much as long-running TV shows like to clip together a “best of” show, here’s my “best of” entry according to Google Analytics.

- Barriers to SOA Adoption: This was originally posted on May 4, 2007, and was in response to a ZapThink ZapFlash on the subject.

- Reusing reuse…: This was originally posted on August 30, 2006, and discusses how SOA should not be sold purely as a means to achieve reuse.

- Service Taxonomy: This was originally posted on December 18, 2006 and was my 100th post. It discusses the importance and challenges of developing a service taxonomy.

- Is the SOA Suite good or bad? This was originally posted on March 15, 2007 and stresses that whatever infrastructure you select (suite or best-of-breed), the important factor is that it fit within a vendor-independent target architecture.

- Well defined interfaces: This post is the oldest one on the list, from February 24, 2006. It discusses what I believe is the important factor in creating a well-defined interface.

- Uptake of Complex Event Processing (CEP): This post from February 19, 2007 discusses my thoughts on the pace that major enterprises will take up CEP technologies and certainly raised some interesting debate from some CEP vendors.

- Master Metadata/Policy Management: This post from March 26, 2007 discusses the increasing problem of managing policies and metadata, and the number of metadata repositories that may exist in an enterprise.

- The Power of the Feedback Loop: This post from January 5, 2007 was one of my favorites. I think it’s the first time that a cow-powered dairy farm was compared to enterprise IT.

- The expanding world of the “repistry”: This post from August 25, 2006 discusses registries, repositories, CMDBs and the like.

- Preparing the IT Organization for SOA: This is a June 20, 2006 response to a question posted by Brenda Michelson on her eBizQ blog, which was encouraging a discussion around Business Driven Architecture.

- SOA Maturity Model: This post on February 15, 2007 opened up a short-lived debate on maturity models, but this is certainly a topic of interest to many enterprises.

- SOA and Virtualization: This post from December 11, 2006 tried to give some ideas on where there was a connection between SOA and virtualization technologies. It’s surprising to me that this post is in the top 5, because you’d think the two would be an apples and oranges type of discussion.

- Top-Down, Bottom-Up, Middle-Out, Outside-In, Chicken, Egg, whatever: Probably one of the longest titles I’ve had, this post from June 6, 2006 discusses the many different ways that SOA and BPM can be approached, ultimately stating that the two are inseparable.

- Converging in the middle: This post from October 26, 2006 discusses my whole take on the “in the middle” capabilities that may be needed as part of SOA adoption along with a view of how the different vendors are coming at it, whether through an ESB, an appliance, EAI, BPM, WSM, etc. I gave a talk on this subject at Catalyst 2006, and it’s nice to see that the topic is still appealing to many.

- SOA and EA… This post on November 6, 2006 discussed the perceived differences between traditional EA practitioners and SOA adoption efforts.

Hopefully, you’ll give some of these older items a read. Just as I encouraged in my feedback loop post, I do leverage Google Analytics to see what people are reading, and to see what items have staying power. There’s always a spike when an entry is first posted (e.g. my iPhone review), and links from other sites always boost things up. Once a post has been up for a month, it’s good to go back and see what people are still finding through searches, etc.

Are you SOA compliant?

There was a question on the Yahoo SOA group on the notion of SOA compliance, jokingly introduced in this blog from January of 2006 discussing SOA compliant toasters and cell phones. The important question was whether there is a notion of “SOA compliance” or not.

I fall in the camp of saying yes. There won’t be any branding initiative associated with it, however, because it’s not about compliance with some general notion of what SOA is (such as having a product that exposes Web Services or REST interfaces). It’s about a solution’s ability to fit within the SOA of its customers.

It is certainly true that many enterprises are moving toward a “buy” strategy instead of a “build” strategy. This doesn’t mean that they don’t have any work in planning out their SOA, however. Organizations that simply accept what the vendors give them are going to have difficulty in performing strategic architectural planning. The typical IT thinking may be that this is simply an integration problem, but it’s deeper than that.

Previously, I had a post on horizontal and vertical thinking. In this discussion, I called out that an organization needs to understand what domains of functionality are horizontal, and what domains are vertical. When looking at vendors, if their model doesn’t match up with the organization’s model, conflict will occur. It may not be at an integration level; it may be at a higher semantic level, where the way the business wants to use a tool just doesn’t match the way the vendor chose to provide those capabilities. Having a domain model of the business functionality provides a way to quantitatively assess (or at least get a step closer to it) the ability of a product to be successful in the enterprise. This is the notion of SOA compliance, in my opinion.
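To make the idea of a quantitative assessment a little more concrete, here is a minimal sketch. It assumes both sides can express their models as a simple mapping from capability to “horizontal” or “vertical.” The capability names, the `fit_score` function, and the scoring rule are all hypothetical illustrations, not an established method.

```python
# Hypothetical sketch: compare an enterprise domain model with a vendor's
# functionality model. Capability names and the scoring rule are
# illustrative assumptions only.

HORIZONTAL = "horizontal"
VERTICAL = "vertical"


def fit_score(enterprise: dict[str, str], vendor: dict[str, str]) -> dict:
    """Classify each capability the vendor offers against the enterprise model."""
    matches, conflicts, unknown = [], [], []
    for capability, vendor_kind in vendor.items():
        enterprise_kind = enterprise.get(capability)
        if enterprise_kind is None:
            unknown.append(capability)      # absent from the enterprise model
        elif enterprise_kind == vendor_kind:
            matches.append(capability)      # both sides agree on the boundary
        else:
            conflicts.append(capability)    # semantic mismatch, likely friction
    considered = len(matches) + len(conflicts)
    score = len(matches) / considered if considered else 0.0
    return {"score": score, "matches": matches,
            "conflicts": conflicts, "unknown": unknown}


enterprise_model = {
    "customer-identity": HORIZONTAL,
    "document-storage": HORIZONTAL,
    "underwriting": VERTICAL,
}
vendor_model = {
    "customer-identity": VERTICAL,   # vendor bundles its own identity handling
    "document-storage": HORIZONTAL,
    "underwriting": VERTICAL,
    "reporting": HORIZONTAL,         # not covered by the enterprise model
}

result = fit_score(enterprise_model, vendor_model)
print(result["score"], result["conflicts"], result["unknown"])
```

The number itself matters less than the lists of conflicts and unknowns, which are the items worth raising with the vendor.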

On the vendor side of the equation, the vendor needs to be able to express their capability in the form of a functionality model that can easily demonstrate how it would fit into a company’s SOA. This applies whether it’s a hosted solution or a shrink-wrapped product. Any vendor that’s using SOA in its marketing efforts but can’t express this should be viewed with caution. Granted, architectural compliance is only one factor in the equation, but I think it’s one that can have strategic, rather than short-term, implications.

In my experience, neither side is very well positioned to allow a quantitative assessment of SOA compliance. Both parties lack the artifacts necessary, and there certainly aren’t any standards around it. Even just having Visio diagrams with rectangles labeled “such-and-such service” would be an improvement in many cases. More often than not, the vendors win out for a simple reason: they have a marketing department, whereas the typical enterprise architecture organization does not. You can assume that a vendor has at least created some collateral for intelligent conversations with Enterprise Architects; however, you can’t assume that an enterprise has created collateral for intelligent conversations with vendors. It needs to go both ways.

Tactical Solutions

Simon Brown, on his blog “Coding the Architecture,” had a good, short post on tactical solutions. One of the points he made that I liked was this:

For me, a tactical solution can be thought of as a stopgap. It’s an interim solution. It’s something potentially quick and dirty. It satisfies a very immediate need. Importantly, it has a limited lifespan.

It’s the last sentence that hits home. If you’re calling something tactical, you’d better have a date on the books as to when the solution will be replaced. That’s not easy to do, especially considering how long the typical IT project takes and when funding decisions are made. I had one project where I went to my supervisor and asked him, “Are you okay with paying $$$ for this now, knowing that we will replace it in 18 months to 24 months?” He said okay, and while the real timeline wound up being about 36 months, the replacement eventually did happen. That’s probably the closest I’ve come to truly having a tactical solution. At the time the solution was put in place, a placeholder was put in place for the strategic solution and the process for obtaining funding began.

This brings up a great point for the domain of enterprise architecture. Many architects out there spend a lot of time establishing a “future state architecture.” Often, the future state is a pristine view of how we want things to be. What doesn’t happen, however, are statements regarding everything that exists today. I’m a big fan of making things as explicit as possible. When I’ve worked on future state architecture, I always ask about the current platforms and what will happen to them. Either they’re an approved part of the future state (and they can be classified as not available for new solutions), or there needs to be a project proposed to migrate to something that is approved. What you don’t want to do is leave something in limbo where no one knows whether it’s part of the architecture or not. The same holds true for so-called tactical solutions. Unless there’s an approved project to replace it with the strategic solution, it’s not tactical, it’s the solution. Don’t let it linger in limbo.

Horizontal and Vertical Thinking, part 2

John Wu commented on my horizontal and vertical thinking post earlier in the week, and I felt it warranted a followup post. He stated:

Enterprise Architecture is a horizontal thinking while application development is a vertical thinking.

While I understand where John was coming from, I think that this approach is only appropriate at the very early stages of an EA practice. The first problem that an organization may face is that no one is thinking horizontally. This may go all the way down to the infrastructure level. Projects are always structured as a top-to-bottom vertical solution. While there may be individuals that are calling out some horizontal needs, unless the organization formally makes it someone’s responsibility to be thinking that way, it will have difficulty gaining traction.

Unfortunately, simply creating an EA organization that thinks horizontally and still letting the project efforts think vertically is not going to fix the problem. If anything, it will introduce tension in the organization, with developers claiming EA is an ivory tower and EA claiming that developers are a bunch of rogues that are doing whatever they want in the name of the project schedule.

If we characterize where the organization needs to go, it’s where both EA and the development organization are thinking both vertically and horizontally. This does come back to governance. Governance is about decision making principles to elicit the desired behavior. The governance policies should help an organization decide what things are horizontal, what things are vertical, and then assign people to work on those efforts within those architectural boundaries. Right now, many organizations are letting project definitions establish architectural boundaries rather than having architectural boundaries first, and then projects within those boundaries. Project boundaries are artificial, and end when the project ends. Architectural boundaries, while they may change over time, should not be tied to the lifecycle of a project.

So, EA should be concerned with both the vertical and the horizontal nature of IT solutions. Based upon the corporate objectives, they should know where it is appropriate to leverage existing, horizontal solutions and where it is appropriate to cede more control (although maintaining architectural consistency) to a vertical domain. Two systems that have some redundant capabilities but are architecturally consistent at least create the potential for a consolidation at some later point in time, when the corporate objectives and policies change. In order to do this, the project staff must also be aware of both the vertical and horizontal nature of IT solutions.

Great discussion on non-functional aspects of SOA

Ron Jacobs had a great discussion with Mark Baciak on ARCast over the course of two podcasts (here and here). In the second one, they discuss Mark’s Alchemy framework. It is essentially an interceptor-based architecture coupled with a centralized store and analytic engine. In my “Converging in the middle” post, I talked about the need for mediation capabilities that address the non-functional aspects of service interactions. Now, most people may see the list and think that an ESB or an XML/Web Service Gateway is the way to go about it. In these podcasts, Mark goes over their system which provides these capabilities, yet through a “smart node” approach. That is, the execution container of both the consumer and the provider is augmented with interceptors that can now act as an intermediary, just as a gateway in the network can.
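For readers who haven’t worked with an interceptor-based design, the “smart node” idea can be sketched roughly as follows. This is only an illustration under my own assumptions: the names (`build_chain`, `metrics_interceptor`, `auth_interceptor`, `central_store`) are hypothetical and are not taken from the Alchemy framework discussed in the podcasts.

```python
# Hypothetical sketch of a "smart node": the execution container wraps each
# service call in a chain of interceptors. Names are illustrative only.
import time
from typing import Callable

Handler = Callable[[dict], dict]
Interceptor = Callable[[dict, Handler], dict]

central_store: list[dict] = []   # stands in for the centralized store


def metrics_interceptor(request: dict, next_handler: Handler) -> dict:
    """Record the elapsed time of every call in the central store."""
    started = time.perf_counter()
    response = next_handler(request)
    central_store.append({
        "operation": request["operation"],
        "elapsed_s": time.perf_counter() - started,
    })
    return response


def auth_interceptor(request: dict, next_handler: Handler) -> dict:
    """Reject requests that carry no caller identity."""
    if not request.get("caller"):
        return {"status": 401, "body": "missing caller identity"}
    return next_handler(request)


def build_chain(service: Handler, interceptors: list[Interceptor]) -> Handler:
    """Wrap the service so the first interceptor in the list runs outermost."""
    handler = service
    for interceptor in reversed(interceptors):
        handler = (lambda req, i=interceptor, nxt=handler: i(req, nxt))
    return handler


def quote_service(request: dict) -> dict:
    """Business logic, unaware of the non-functional concerns around it."""
    return {"status": 200, "body": f"quote for {request['caller']}"}


node = build_chain(quote_service, [metrics_interceptor, auth_interceptor])
ok = node({"operation": "getQuote", "caller": "alice"})
denied = node({"operation": "getQuote"})
print(ok, denied, len(central_store))
```

The service code never changes when an interceptor is added or removed, which is the same property a gateway in the network gives you, just located inside the node.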

The smart network versus smart node discussion falls into the category of religious debates. Truth be told, both ways (and the third way, which is a hybrid between the two) can be successful. There is no right or wrong way in the general sense. What you need to do is understand which effort is most likely to produce the operational model you desire. A smart node approach tends to (but doesn’t have to) favor developers. A smart network model tends to (but doesn’t have to) favor operations. As a case in point, I’ve seen a smart network approach that would not be feasible without developer activity for every change, and I’ve seen a smart node approach that was very policy-driven and operations-friendly. So, as an alternate view on the whole problem space, I encourage you to listen to the podcasts.

The vendor carousel continues to spin…

It’s not an acquisition this time, but a rebranding/reselling agreement between BEA and AmberPoint. I was head down in my work and hadn’t seen this announcement until Google Alerts kindly informed me of a new link from James Urquhart, a blogger on Service Level Automation whose writings I follow. He asked what I think of this announcement, so I thought I’d oblige.

I’ve never been an industry analyst in the formal sense, so I don’t get invited to briefings, receive press releases, or benefit from whatever other normal mechanisms (if there are any) analysts use. I am best thought of as an industry observer, offering my own opinions based on my experience. I have some experience with both BEA and AmberPoint, so I do have some opinions on this. 🙂

Clearly, BEA must have customers asking about SOA management solutions. BEA doesn’t have an enterprise management solution like HP or IBM. Even if we just consider BEA products themselves, I don’t know whether they have a unified management solution across all of their products. So, there’s definitely the potential for AmberPoint technology to provide benefits to the BEA platform, and customers must be asking about it. If this is the case, you may be wondering why BEA didn’t just acquire AmberPoint. First, AmberPoint has always had a strong relationship with Microsoft. I have no idea how much this results in sales for them, but clearly an outright acquisition by BEA could jeopardize that channel. Second, as I mentioned, BEA doesn’t have an enterprise management offering of which I’m aware. AmberPoint can be considered a niche management solution. It provides excellent service/SOA management, but it’s not going to allow you to also manage your physical servers and network infrastructure. So, an acquisition wouldn’t make sense on either side. BEA wouldn’t gain entry into that market, and AmberPoint would be at risk of losing customers as their message could get diluted by the rest of the BEA offerings.

As a case in point, you don’t see a lot of press about Oracle Web Services Manager these days. Oracle acquired this technology when they acquired Oblix (who acquired it when they acquired Confluent). I don’t consider Oracle a player in enterprise systems management, and as a result, I don’t think people think of Oracle when they’re thinking about Web Services Management. They’re probably more likely to think of the big boys (HP, IBM, CA) and the specialty players (AmberPoint, Progress Actional).

So, who’s getting the best out of this deal? Personally, I think this is a great win for AmberPoint. It extends a sales channel for them, and is consistent with the approach they’ve taken in the past. Reselling agreements can provide strength to these smaller companies, as they build on a perception of the smaller company as either being a market leader, having great technology, or both. On the BEA side, it does allow them to offer one-stop solutions directly in response to SOA-related RFPs, and I presume that BEA hopes it will result in more services work. BEA’s governance solution is certainly not going to work out of the box since it consists of two rebranded products (AmberPoint and HP/Mercury/Systinet) and one recently acquired product (Flashline). All of that would need to be integrated with their core execution platform. It will help BEA with existing customers who don’t want to deal with another vendor but desire an SOA management solution, but BEA has to ensure that there are integration benefits rather than just having the BEA brand.

More vendor movement…

SoftwareAG is acquiring webMethods. Interestingly, Dana Gardner’s analysis of the deal seems to imply that webMethods’ earlier acquisition of Infravio may have made them more attractive, but given that SoftwareAG had a solution already, CentraSite, I wouldn’t think that was the case. It is true, however, that CentraSite hasn’t received a lot of media attention. I wonder what this now means for Infravio. Clearly, SoftwareAG has two solutions in one problem space, so some consolidation is likely to occur.

Dana’s analysis points out that “bigger is better in terms of SOA solutions provider survival.” This is an interesting observation, although only time will tell. The larger best-of-breed players are expanding their offerings, but will they be viewed as platform players by consumers? It’s very interesting to look at the space at this point. At the top, you’ve got companies like IBM and Oracle who clearly are full platform vendors. It’s in the middle where things get really messy. You have everything from companies with large enough customer bases to be viewed in a strategic light to clear niche players. This includes such names as BEA, HP, Sun, Tibco, Progress (which includes Sonic, Actional, Apama, and a few others), SOA Software, Iona, CapeClear, AmberPoint, Skyway Software, iWay Software, Appistry, Cassatt, Lombardi, Intalio, Vitria, Savvion, and too many more to name. Clearly, there are plenty of other fish out there, and consolidation will continue.

What we’re seeing is that you can’t simply buy SOA. There also isn’t one single piece of infrastructure required for SOA. Full SOA adoption will require looking at nearly every aspect of your infrastructure. As a result, companies that are trying to build a marketing strategy around SOA are going to have a hard time now that the average customer is becoming more educated. That means one of two things: increase your offerings or narrow your marketing message. If you narrow the message, you run the risk of becoming insignificant, struggling to gain mindshare with the rest of the niche players. Thus, we enter the stage of eat or be eaten for all of those companies that don’t have a cash cow to keep them happy in their niche. As we’ve seen however, even the companies that do have significant recurring revenue can’t sit still. That’s life as a technology vendor, I guess.

Update: Beth Gold-Bernstein posted a very nice entry discussing this acquisition including a discussion of the areas of overlap, including the Infravio/CentraSite item. It also helped me to know where SoftwareAG now fits into this whole picture, since I honestly didn’t know all that much about them since the bulk of their business is in Europe.

The Reuse Marketplace

Marcia Kaufman, a partner with Hurwitz & Associates, posted an article on IT-Director.com entitled “The Risks and Rewards of Reuse.” It’s a good article, and the three recommendations can really be summed up in one word: governance. While governance is certainly important, the article misses out on another important, perhaps more important, factor: marketing.

When discussing reuse, I always refer back to a presentation I heard at SD East way back in 1998. Unfortunately, I don’t recall the speaker, but he had established reuse programs at a variety of enterprises, some successful and some not successful. He indicated that the factor that influenced success the most was marketing. If the groups that had reusable components/services/whatever were able to do an effective job in marketing their goods and getting the word out, the reuse program as a whole would be more successful.

Focusing on governance alone still means those service owners are sitting back and waiting for customers to show up. While the architectural governance committee will hopefully catch a good number of potential customers and send them in the direction of the service owner, that committee should be striving to reach “rubber stamp” status, meaning the project teams should have already sought out potential services for reuse. This means that the service owners need to be marketing their services effectively so that they get found in the first place. I imagine the potential customer using Google searches on the service catalog, but then within the service catalog, you’d have a very Amazon-like feel that may say things like “30% of other customers found this service interesting…” Service owners would be monitoring this data to understand why consumers are or are not using their services. They’d be able to see why particular searches matched, what information the customer looked at, and know whether the customer eventually decided to use the service/resource or not.

Interestingly, this is exactly what companies like Flashline and ComponentSource were trying to do back in the 2000 timeframe, with Flashline having a product to establish your own internal “marketplace” while ComponentSource was much more of a hosted solution aimed at a community broader than the enterprise. With the potential to utilize hosted services always on the rise, this makes it even more interesting, because you may want your service catalog to show you both internally created solutions and potential hosted solutions. Think of it as amazon.com on the inside, with Amazon partner content integrated from the outside. I don’t know how easily one could go about doing this, however. While there are vendors looking at UDDI federation, what I’ve seen has been focused on internal federation within an enterprise. Have any of these vendors worked with, say, StrikeIron, so that hosted services show up in their repository (if the customer has configured it to allow them)? Again, it would be very similar to amazon.com. When you search for something on Amazon, you get some items that come from Amazon’s inventory. You also get links to Amazon partners that have the same products, or even products that are only available from partners.
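As a rough illustration of the kind of catalog I have in mind, here is a minimal sketch that records views and adoptions so a service owner can see how often a listing is found versus actually used. Everything in it is a hypothetical assumption on my part: the `Catalog` and `Listing` classes, the “internal”/“hosted” labels, and the naive keyword search are not any vendor’s product or API.

```python
# Hypothetical sketch of a reuse catalog that tracks consumer behavior.
# Class names, fields, and the keyword search are illustrative only.
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Listing:
    name: str
    description: str
    source: str  # "internal" or "hosted"; labels are assumptions


@dataclass
class Catalog:
    listings: dict[str, Listing] = field(default_factory=dict)
    views: dict[str, int] = field(default_factory=lambda: defaultdict(int))
    adoptions: dict[str, int] = field(default_factory=lambda: defaultdict(int))

    def add(self, listing: Listing) -> None:
        self.listings[listing.name] = listing

    def search(self, term: str) -> list[Listing]:
        """Naive keyword match; every hit counts as a view for its owner."""
        hits = [item for item in self.listings.values()
                if term.lower() in item.description.lower()]
        for hit in hits:
            self.views[hit.name] += 1
        return hits

    def adopt(self, name: str) -> None:
        """Record that a consumer decided to use the listed resource."""
        self.adoptions[name] += 1

    def adoption_rate(self, name: str) -> float:
        """Share of views that ended in a decision to use the resource."""
        seen = self.views[name]
        return self.adoptions[name] / seen if seen else 0.0


catalog = Catalog()
catalog.add(Listing("address-check", "Validate a postal address", "internal"))
catalog.add(Listing("geo-lookup", "Resolve an address to coordinates", "hosted"))

for _ in range(4):
    catalog.search("address")        # both listings match each time
catalog.adopt("address-check")

rate = catalog.adoption_rate("address-check")
print(rate, catalog.adoption_rate("geo-lookup"))
```

Internal and hosted listings sit side by side in the same search results, and the owner of each can see its own view and adoption counts.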

This is a great conceptual model; however, I do need to be a realist regarding the potential of such a robust tool today. How many enterprises have a service library large enough to warrant establishing this rich a marketplace-like infrastructure? Fortunately, I do think this can work. Reuse is about much more than services. If all of your reuse is targeted at services, you’re taking a big risk with your overall performance. A reuse program should address not only service reuse, but also reuse of component libraries, whether internal corporate libraries or third-party libraries, and even shared infrastructure. If your program addresses all IT resources that have the potential for reuse, now the inventory may be large enough to warrant an investment in such a marketplace. Just make sure that it’s more than just a big catalog. It should provide benefit not only for the consumer, but for the provider as well.

The management continuum

Mark Palmer of Apama continued his series of posts on myths around the EDA/CEP space, with number 3: BAM and BPM are Converging. Mark hit on a subject that I’ve spoken with clients about; however, I don’t believe that I’ve ever posted on it.

Mark’s premise is that it’s not BAM and BPM that are converging, it’s BAM and EDA. Converging probably isn’t the right word here, as it implies that the two will become one, which certainly isn’t the case. That wasn’t Mark’s point, either. His point was that BAM will leverage CEP and EDA. This, I completely agree with.

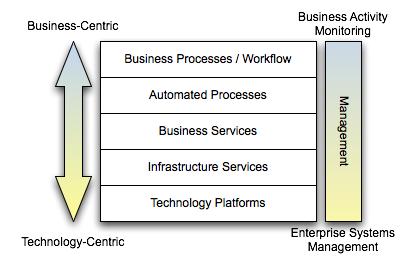

You can take a view on our solutions like the one below. At higher levels, the concepts we’re dealing with are more business-centric. At lower levels, the concepts are more technology-centric. Another way of looking at it is that at the higher levels, the products involved would be specific to the line of business/vertical we’re dealing with. At the lower levels, the products involved would be more generic, applicable to nearly any vertical. For example, an insurance provider may have things like quoting and underwriting at the top, but at the bottom, we’d have servers, switches, etc. Clearly, the use of servers are not specific to the insurance industry.

All of these platforms require some form of management and monitoring. At the lowest levels of the diagram, we’re interested in traditional Enterprise Systems Management (ESM). The systems would be getting data on CPU load, memory usage, etc. and technologies like SNMP would be involved. One could certainly argue that these ESM tools are very event-driven. The collection of metrics and alerts is nearly always done asynchronously. If we move up the stack, we get to business activity monitoring. The interesting thing is that the fundamental architecture of what is needed does not change. Really, the only thing that changes is the semantics of the information that needs to get pushed out. Rather than pushing CPU load, I may be pushing out the number of auto insurance quotes requested and processed. This is where Mark is right on the button. If the underlying systems are pushing out events, whether at a technical level or at a business level, there’s no reason why CEP can’t be applied to that stream to deliver back valuable information to the enterprise or, even better, to come full circle and invoke some automated process to take action.
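To illustrate the point that only the semantics change, here is a minimal sketch of one sliding-window rule applied to both a technical metric and a business metric. It is a toy under my own assumptions, not how any particular CEP engine works: the `Event` and `WindowAverageRule` names, the metric names, and the thresholds are all hypothetical, and events are assumed to arrive in timestamp order.

```python
# Hypothetical sketch: the same sliding-window rule serves a technical
# metric (CPU load) and a business metric (quotes requested).
# Assumes events arrive in timestamp order.
from collections import deque
from dataclasses import dataclass


@dataclass
class Event:
    timestamp: float   # seconds
    metric: str
    value: float


class WindowAverageRule:
    """Fire when a metric's average over the last window_s seconds exceeds threshold."""

    def __init__(self, metric: str, window_s: float, threshold: float):
        self.metric = metric
        self.window_s = window_s
        self.threshold = threshold
        self._window = deque()

    def on_event(self, event: Event) -> bool:
        if event.metric != self.metric:
            return False
        self._window.append(event)
        # Drop events that have aged out of the window; the current event
        # always remains, so the deque is never empty here.
        while self._window[0].timestamp <= event.timestamp - self.window_s:
            self._window.popleft()
        average = sum(e.value for e in self._window) / len(self._window)
        return average > self.threshold


cpu_rule = WindowAverageRule("cpu_load", window_s=60, threshold=0.9)
quote_rule = WindowAverageRule("auto_quotes_requested", window_s=60, threshold=100)

stream = [
    Event(0, "cpu_load", 0.50),
    Event(10, "auto_quotes_requested", 80),
    Event(30, "cpu_load", 0.95),
    Event(40, "auto_quotes_requested", 150),
    Event(70, "cpu_load", 0.97),
]

alerts = [(event.timestamp, rule.metric)
          for event in stream
          for rule in (cpu_rule, quote_rule)
          if rule.on_event(event)]
print(alerts)
```

In a real deployment the alert would feed a dashboard or trigger an automated process; the rule itself neither knows nor cares whether it is watching servers or insurance quotes.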

I think that the most important takeaway on this is that you have to be thinking from an architectural standpoint as you build these things out. This isn’t about running out and buying a BAM tool, a BPM tool, a CEP tool, or anything else. What metrics are important? How will the metrics be collected? How do you want to perform analytics (is static analysis against a centralized store enough, or do you need dynamic analysis in realtime driven by changing business rules)? What do you want to do with the results of that analysis? Establishing a management architecture will help you make the right decisions on what products you need to support it.

Master Metadata/Policy Management

Courtesy of Dana Gardner’s blog, I found out that IONA has announced a registry/repository product, Artix Registry/Repository.

I’m curious if this is indicative of a broader trend. First, you had acquisitions of the two most prominent players in the registry/repository space: Systinet by Mercury, which was then acquired by HP, and Infravio by webMethods. For the record, Flashline was also acquired by BEA. You’ve had announcements of registry/repository solutions as part of a broader suite by IBM (WebSphere Registry/Repository), SOA Software (Registry), and now IONA. There’s also Fujitsu/Software AG’s CentraSite and LogicLibrary’s Logidex, which are still primarily independent players. What I’m wondering is whether or not the registry/repository marketplace simply can’t make it as an independent purchase, but will always be a mandatory add-on to any SOA infrastructure stack.

All SOA infrastructure products have some form of internal repository. Whether we’re talking about a WSM system, an XML/Web Services gateway, or an ESB, they all maintain some internal configuration that governs what they do. You could even lump application servers and BPM engines into that mix if you so desire. Given the absence of domain specific policy languages for service metadata, this isn’t surprising. So, given that every piece of infrastructure has its own internal store, how do you pick one to be the “metadata master” of your policy information? Would someone buy a standalone product solely for that purpose? Or are they going to pick a product that works with the majority of their infrastructure, and then focus on integration with the rest? For the smaller vendors, it will mean that they have to add interoperability/federation capabilities with the platform players, because that’s what customers will demand. The risk for the consumer, however, is that this won’t happen. This means that the consumer will be the one to bear the brunt of the integration costs.

I worry that the SOA policy/metadata management space will become no better than the Identity Management space. How many vendor products still maintain proprietary identity stores rather than allowing identity to be managed externally through the use of Active Directory/LDAP and some Identity Management and Provisioning solution? This results in expensive synchronization and replication problems that keep the IT staff from being focused on things that make a difference to the business. Federation and interoperability across these registry/repository platforms must be more than a checkbox on someone’s marketing sheet; it must be demonstrable, supported, and demanded by customers as part of their product selection process. The last thing we need is a scenario where Master Data Management technologies are required to manage the policies and metadata of services. Let’s get it right from the beginning.

SOA and Enterprise Security

James McGovern asked a number of us in the blogosphere if we’d be willing to share some thoughts on security and SOA. First, I recommend you go and read James’ post. He makes the claim that if you’ve adopted SOA successfully, it should make security concerns, such as user-centric identity, single sign-on, onboarding and offboarding employees, asset management, etc., easier. I completely agree. I’ve yet to encounter an organization that’s reached that point with SOA, but if they did, I think James’ claims should hold true. Now on to the subject at hand, however.

I’ve shared some thoughts on security in the past, particularly in my post “The Importance of Identity.” Admittedly, however, it probably falls into the high level category. I actually look to James’ posts on security, XACML, etc. to fill in gaps in my own knowledge, as it’s not an area where I have a lot of depth. I’m always up for a challenge, however, and this space clearly is a challenge.

Frankly, I think security falls only slightly ahead of management when it comes to things that haven’t received proper attention. We can thank the Internet and some high profile security problems for elevating its importance in the enterprise. Unfortunately, security suffers from the same baggage as the rest of IT. Interestingly, though, security technology probably took a step backward when we moved off of mainframes and into the world of client-server. Because there was often a physical wire connecting that dumb terminal to the big iron, you had identity. Then along came client-server and N-tier systems with application servers, proxy servers, etc. and all of a sudden, we’ve completely lost the ability to trace requests through the system. Not only did applications have no concept of identity, the underlying programming languages didn’t have any concept of identity, either. The underlying operating system did, but what good is it to know something is running as www?

James often laments the fact that so many systems (he likes to pick on ECM) still lack the ability to leverage an external identity management system, and instead have their own proprietary identity stores and management. He’s absolutely on the mark with this. Identity management is the cornerstone of security, in my opinion. I spent a lot of time working with an enterprise security architect discussing the use of SSL versus WS-Security, the different WS-Security profiles, etc. In the end, all of that was moot until we figured out how to get identity into the processing threads to begin with! Yes, .NET and Java both have the concept of a Principal. How about your nice graphical orchestration engine? Is identity part of the default schema that is the context for the process execution? I’m guessing that it isn’t, which means more work for your developers.
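To make the “identity in the processing threads” problem a bit more concrete, here is a minimal sketch in Python. It is not taken from any product mentioned above; the names `current_principal`, `audit`, and `handle_request` are hypothetical, and it assumes the caller has already been authenticated by something upstream. The point is only that identity has to be carried explicitly into whatever thread ends up doing the work.

```python
import contextvars
import threading

# Hypothetical holder for "who is this request running as?"
current_principal = contextvars.ContextVar("current_principal", default=None)


def audit(action):
    """Downstream code that needs the caller's identity, not the OS user."""
    return f"{action} by {current_principal.get() or 'anonymous'}"


def handle_request(user, action):
    """Set the identity for this request and hand the work to another thread."""
    token = current_principal.set(user)
    try:
        # Snapshot the current context so the worker thread sees the same
        # principal; a new thread is not guaranteed to inherit it.
        ctx = contextvars.copy_context()
        result = []
        worker = threading.Thread(
            target=lambda: result.append(ctx.run(audit, action))
        )
        worker.start()
        worker.join()
        return result[0]
    finally:
        # Do not leak this request's identity into the next one.
        current_principal.reset(token)


print(handle_request("alice", "update-policy"))
```

An orchestration engine would need the equivalent of that context snapshot built into its process context; if it is missing, every developer ends up writing this plumbing by hand.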

So, unfortunately, all I can do is point out some potential pitfalls at this point. I haven’t had the opportunity to go deep in this space, yet, but hopefully this is enough information to get you thinking about the problems that lie ahead.

Is the SOA Suite good or bad?

I haven’t listened to the podcast yet, but Joe McKendrick posted a summary of the discussion in a recent Briefings Direct SOA Insights conversation organized by Dana Gardner. In his entry, Joe asks whether vendors are promoting an oxymoron in offering SOA suites. He states:

“Jumbo shrimp” and “government organization” are classic examples of oxymorons, but is the idea of an “SOA suite” also just as much a contradiction of terms? After all, SOA is not supposed to be about suites, bundles, integration packages, or anything else that smacks of vendor lock-in.

“The big guys — SAP, Oracle, Microsoft, webMethods, lots of software vendors — are saying, ‘Hey, we provide a bigger, badder SOA suite than the next guy,'” Jim Kobelius pointed out. “That raises an alarm bell in my mind, or it’s an anomaly or oxymoron, because when you think of SOA, you think of loose coupling and virtualization of application functionality across a heterogeneous environment. Isn’t this notion of a SOA suite from a single vendor getting us back into the monolithic days of yore?”

Personally, I have no issue with SOA suites. The big vendors are always going to go down this route, and if anything, it simply demonstrates just how far we have to go on the open integration front. If you follow this blog, you know that I’ve discussed SOA for IT. SOA for IT, in my mind, is all about integration across the technology infrastructure at a horizontal level, not a vertical level. SOA for business is concerned with the vertical level semantics of the business, and allowing things to integrate in a business sense. SOA for IT is about integration at the technical level. Can my Service Management infrastructure talk to my Service Execution infrastructure? Can my Service Execution infrastructure talk to my Service Mediation infrastructure? Can my Service Mediation infrastructure talk to my Service Management infrastructure? The list goes on. Why is there still a need for these SOA suites? Simply put, we still lack standards for communication between these platforms. It’s one thing to say all of the infrastructure knows how to speak with a UDDI v3 registry. It’s another thing to have the infrastructure agree on the semantics of the metadata placed in a registry/repository (note, there’s no standard repository API), and leverage that information successfully across a heterogeneous set of environments. The smaller vendors try to form coalitions to make this a reality, as was the case with Systinet’s Governance Interoperability Framework, but as they get swallowed up by the big fish, what happens? IBM came out with WebSphere Registry/Repository and it introduced new, proprietary APIs. Competitive advantage for an all IBM environment? Absolutely. If I don’t have an all IBM environment, am I that much worse off, however? If I have AmberPoint or Actional for SOA management, I’m still dealing with their proprietary interfaces and policy definitions, so vendor lock-in still exists. I’m just locked in to multiple vendors, rather than one.

The only way this gets fixed is if customers start demanding open standards for technology integration as part of their evaluation criteria. While the semantics of the information exchange may not exist yet, you can at least ask whether or not the vendor exposes management interfaces as services. Put another way, the internal architecture of the product needs to be consistent with the internal architecture of your IT systems. If you desire to have separation of management from enforcement, then your vendor products must expose management services. If the only way to configure their product is through a web-based user interface or by attempting to directly manipulate configuration files, this is going to be very costly for you if you’re trying to reduce the number of independent management consoles that operations needs to deal with. Even if it’s all IBM, Oracle, Microsoft, or whoever, the internal architecture of that suite needs to be consistent with your vendor-independent target architecture. If you haven’t taken the time to develop one, then you’re allowing the vendors to push their will on you.

Let’s use the city planning analogy. A suite vendor is akin to the major developer. Do the city planners simply say, “Here’s your 80,000 acres, have fun?” That probably wouldn’t result in a good deal for the city. Taking the opposite extreme, the city doesn’t want individual property owners to do whatever they want, either. Last year, there was an article about a nearby town that had somehow managed to allow an adult store to set up shop next door to a daycare center in a strip mall. Not good. The right approach, whether you want to have a diverse set of technologies or a very homogeneous set, is to keep the power in the hands of the planners, and that is done through architecture. If you can remain true to your architecture with a single vendor? Great. If you prefer to do it with multiple vendors, that’s great as well. Just make sure that you’re setting the rules, not them.

IT in homes, schools

I’ve had some lightweight posts on SOA for the home in the past, and for whatever reason, it seems to be tied to listening to IT Conversations. Well, it’s happened again. In Phil and Scott’s discussion with Jon Udell, they lamented the problems of computers in the home. Phil discussed the issues he’s encountered with replacing servers in his house and moving from 32-bit to 64-bit servers (nearly everything had to be rebuilt, and he indicated that he would have been better off sticking with 32-bit servers). Jon and Phil both discussed some of the challenges that they’ve had in helping various relatives with technology.

It was a great conversation and made me think of a recent email exchange concerning my father-in-law’s school. He’s a grade school principal, and I built their web site for them several years ago. They host it themselves, and the computer teacher has done a great job in keeping it humming along. That being said, there’s still room for improvement. Many of the teachers still host their pages externally. My father-in-law sends a letter home with the kids each week that is a number of short paragraphs and items that have occurred throughout the week. Boy, that could easily be syndicated as a blog. Of course, that would require installing WordPress on the server, which while relatively easy for me, is something that could get quite frustrating for someone not used to operating at the command line. Anyway, the email conversation was about upgrading the server. One of the topics that came up was hosting email ourselves. Now, while it’s very easy to set up a mail server, the real concern here comes up with reliability. People aren’t going to be happy if they can’t get to their email. Even if we just look at the website, as it increasingly becomes part of the way the school communicates with the community, it starts to become critical.

When I was working in an enterprise, redundancy was the norm. We had load balancers and failover capabilities. How many people have a hardware load balancer at home? I don’t. You may have a Linux box that does this, but it’s still a single point of failure. A search at Amazon really didn’t turn up too many options for the consumer, or even a cash-strapped school for that matter. This really brings up something that will become an increasing concern as we march toward a day where connectivity is ubiquitous. Vendors are talking about the home server, but when corporations have entire staffs dedicated to keeping those same technologies running, how on earth are we going to expect Mom and Pop in Smalltown U.S.A. to be able to handle the problems that will occur?

Think about this. Today, I would argue that most households still have normal phones and answering machines. Why don’t we have the email equivalent? Wouldn’t it be great if I could purchase a $100 device that I just plug into my network and now have my own email server? Yes, it would be okay if I had to call my Internet provider and say, “please associate this with biske.com” just as I must do when I establish a phone line. What do I do, however, if that device breaks? What if it gets hacked and becomes a zombie device contributing to the flood of spam on the Internet? How about a device that enables me to share videos and pictures with friends and family? Again, while hosted solutions are nice, it would be far more convenient to merely pull them off the camcorder and digital camera and make it happen. I fully believe that the right thing is to always have a mix of options. Some people will be fine with hosted solutions. Some people will want the control and power of being able to do it themselves, and there’s a marketplace for both. I get tired of these articles that say things like “hosted productivity apps will end the dominance of Microsoft Office.” Phooey. They won’t. It will evolve to somewhere in the middle, rather than one side or the other. Conversations like that are always like a pendulum, and the pendulum always swings back. I’m off on a tangent, here. Back to the topic: we are going to need to make improvements in orders of magnitude on the management of systems today. Listen to the podcast, and hear the things that Jon and Phil, two leading technologists who are certainly capable of solving most any problem, lament. Phil gives the example of calls from his wife (I get them as well) that “this thing is broken.” While he immediately understands that there must be a way to fix it, because we understand the way computers operate behind the scenes, the average Joe does not. We’ve got a long way to go to get the ubiquity that we hope to achieve.

Metrics, metrics, metrics

James McGovern threw me a bone in a recent post, and I’m more than happy to take it. In his post, “Why Enterprise Architects need to noodle metrics…” he asks:

Hopefully bloggers such as Robert McIlree, Scott Mark, Todd Biske and others would be willing to share not only successes within their own enterprise when it comes to metrics but also any unintended consequences in terms of collecting them.

I’m a big, big fan of instrumentation. One of the projects that I’m most proud of was when we built a custom application dashboard using JMX infrastructure (when JMX was in its infancy) for a pretty large web-based system. The people that used it really enjoyed the insight it gave them into the run-time operations of the system. I personally didn’t get to use it, as I was rolled onto another project, but the operations staff loved it. Interestingly, my first example of metrics being useful comes from that project, but not from the run-time management. It came from our automated build system. At the time, we had an independent contractor who was acting as a project management / technical architecture mentor. He would routinely visit the web page for the build management system and record the number of changed files for each build. This was a metric that the system captured for us, but no one paid much attention to it. He started posting graphs showing the number of changed files over time, and how we had spikes before every planned iteration release. He let us know that until those spikes disappeared, we weren’t going live. Regardless of the number of defects logged, the significant amount of change before a release was a red flag for risk. This message did two things. First, it kept people from working to a date, and got them to just focus on doing their work at an appropriate pace. Second, I do think it helped us release a more stable product. Fewer changes meant more time for integration testing within the iteration.

The second area where metrics have come into play was the initial use of Web Services. I had response time metrics on every single web service request in the system. This became valuable for many reasons. First, because the thing collecting the metrics was new infrastructure, everyone wanted to blame it when something went wrong. The metrics it collected easily showed that it wasn’t the source of any problem, and actually was a great tool in narrowing down where possible problems were. The frustration switched more to the systems that didn’t have these metrics available because they were big, black boxes. Second, we caught some rogue systems. A service that typically had 200,000 requests per day showed up on Monday with over 3 million. It turns out a debugging tool had been written by a project team, but that tool itself had a bug and started flooding the system with requests. Nothing broke, but had we not had these metrics and someone looking at them, it eventually would have caused problems. This could have gone undetected for weeks. Third, we saw trends. I looked for anything that was out of the norm, regardless of whether any user complained or any failures occurred. When the response time for a service had doubled over the course of two weeks, I asked questions because that shouldn’t happen. This exposed a memory leak that was fixed. When loads that had been stable for months started going up consistently for two weeks, I asked questions. A new marketing effort had been announced, resulting in increased activity for one service consumer. This marketing activity would have eventually resulted in loads that could have caused problems a couple months down the road, but we detected it early. An unintended consequence was a service that showed a 95% failure rate, yet no one was complaining. It turns out a SOAP fault was being used for a non-exceptional situation at the request of the consumer. The consuming app handled it fine, but the data said otherwise. Again, no problems in the system, but it did expose incorrect use of SOAP.
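The three kinds of checks described above (a sudden volume spike, a slow drift in response time, and a fault rate that looks too high to be real) are simple enough to sketch. The Python below is only an illustration under my own assumptions: the function names and the thresholds (5x the median, a doubling over 14 days, a 50% fault rate) are hypothetical and are not what the original system used. In practice a person looking at the graphs did this work.

```python
from statistics import median


def volume_spike(recent_daily_counts, today_count, factor=5.0):
    """Flag today's request count if it is far above the recent median."""
    baseline = median(recent_daily_counts)
    return today_count > factor * baseline


def sustained_drift(daily_avg_ms, window=14, ratio=2.0):
    """Flag a service whose average response time at the end of the window
    is at least `ratio` times what it was at the start of the window."""
    if len(daily_avg_ms) < window:
        return False
    recent = daily_avg_ms[-window:]
    return recent[-1] >= ratio * recent[0]


def suspicious_fault_rate(requests, faults, threshold=0.5):
    """Flag a service where most responses are faults, even if nobody complains."""
    return requests > 0 and faults / requests >= threshold


# A service that normally sees about 200,000 requests per day.
print(volume_spike([200_000] * 7, 3_000_000))
# Response time creeping from 100 ms to 230 ms over two weeks.
print(sustained_drift(list(range(100, 240, 10))))
# 95% of responses are SOAP faults.
print(suspicious_fault_rate(1_000, 950))
```

None of these checks says what is wrong. They only tell you where to start asking questions, which is exactly how the memory leak and the SOAP fault misuse were found.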

While these metrics may not all be pertinent to the EA, you really only know by looking at them. I’d much rather have an environment where metrics are universally available and the individuals can tailor the reporting and views to information they find pertinent. Humans are good at drawing correlations and detecting anomalies, but you need the data to do so. The collection of these metrics did not have any impact on the overall performance of the system; however, the collection was architected to ensure that. Metric collection should be performed as an out-of-band operation. As far as the practice of EA is concerned, one metric that I’ve seen recommended is watching policy adherence and exception requests. If your rate of exception requests is not going down, you’re probably sitting off in an ivory tower somewhere. Exception requests shouldn’t be at zero, either, however, because then no one is pushing the envelope. Strategic change shouldn’t solely come from EA as sometimes the people in the trenches have more visibility into niche areas for improvement. Policy adherence is also needed to determine what policies are important. If there are policies out there that never even come up in a solution review, are they even needed?
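Since “out-of-band” can mean different things, here is one minimal way to picture it in Python. This is a sketch under my own assumptions, not the design of the system described above: `OutOfBandRecorder` and its `sink` callback are hypothetical names. The request path only drops a measurement onto a bounded in-memory queue, and a background thread does the slow work of shipping it somewhere.

```python
import queue
import threading


class OutOfBandRecorder:
    """Hands measurements to a background thread so the request path
    never waits on the metrics store."""

    def __init__(self, sink, max_pending=10_000):
        self._queue = queue.Queue(maxsize=max_pending)
        self._sink = sink      # callable that persists one measurement
        self.dropped = 0       # measurements discarded under overload
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def record(self, service, elapsed_ms):
        """Called on the request path; never blocks."""
        try:
            self._queue.put_nowait((service, elapsed_ms))
        except queue.Full:
            # Losing a data point is preferable to slowing down a request.
            self.dropped += 1

    def _drain(self):
        while True:
            item = self._queue.get()
            if item is None:   # sentinel placed by close()
                break
            self._sink(item)

    def close(self):
        """Flush everything still queued, then stop the worker."""
        self._queue.put(None)
        self._worker.join()


collected = []
recorder = OutOfBandRecorder(collected.append)
recorder.record("quote", 12.5)
recorder.record("order", 40.0)
recorder.close()
print(collected)
```

The design choice worth noticing is the bounded queue: if the metrics store falls behind, the recorder drops data and counts the drops rather than pushing the delay back onto the service being measured.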

The biggest risk I see with extensive instrumentation is not resource consumption. Architecting an instrumentation solution is not terribly difficult. The real risk is in not providing good analytics and reporting capabilities. It’s great to have the data, but if someone has to perform extracts to Excel or write their own SQL and graphing utilities, they can waste a lot of time that should be spent on other things. While access to the raw data lets you do any kind of analysis that you’d like, it can be a time-consuming exercise. It only gets worse when you show it to someone else, and they ask whether you can add this or that.